Welcome to the ALR-Lab

The Autonomous Learning Robots (ALR) Lab at the Institute for Anthropomatics and Robotics of the Department of Informatics focuses on the development of novel machine learning methods for robotics.

News:

We’re excited to announce the launch of the brand-new website of the Autonomous Learning Robots (ALR) group at the Karlsruhe Institute of Technology (KIT)!

🌐 Visit us at: https://alr-kit.de/

We present PointMapPolicy, a diffusion-based imitation learning framework that processes 3D point clouds as structured 2D grids without downsampling. By representing depth as regular arrays of XYZ coordinates, we enable direct application of ResNet, ConvNeXt, and ViT architectures while preserving geometric precision. Combined with xLSTM backbones for efficient multi-modal fusion, our method outperforms existing RGB and 3D approaches on challenging manipulation benchmarks.
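
As a rough illustration of the point-map idea, the sketch below back-projects a depth image into an H×W×3 grid of XYZ coordinates using a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy` and the function name `depth_to_point_map` are assumptions for illustration, not PointMapPolicy's code; the point is only that every pixel keeps its grid position, so standard 2D encoders can consume the result.

```python
import numpy as np

def depth_to_point_map(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth image into an (H, W, 3) grid of XYZ points.

    Assumes a pinhole camera with intrinsics fx, fy, cx, cy and depth measured
    along the optical axis. Every pixel keeps its grid position, so nothing is
    downsampled and the result can be fed to a standard 2D image encoder.
    """
    h, w = depth.shape
    # u indexes columns, v indexes rows; both arrays have shape (H, W).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)

depth = np.full((4, 6), 2.0)  # toy flat scene 2 m in front of the camera
point_map = depth_to_point_map(depth, fx=500.0, fy=500.0, cx=3.0, cy=2.0)
print(point_map.shape)  # (4, 6, 3)
```

Transposing to channels-first (`point_map.transpose(2, 0, 1)`) gives the layout most convolutional encoders expect.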

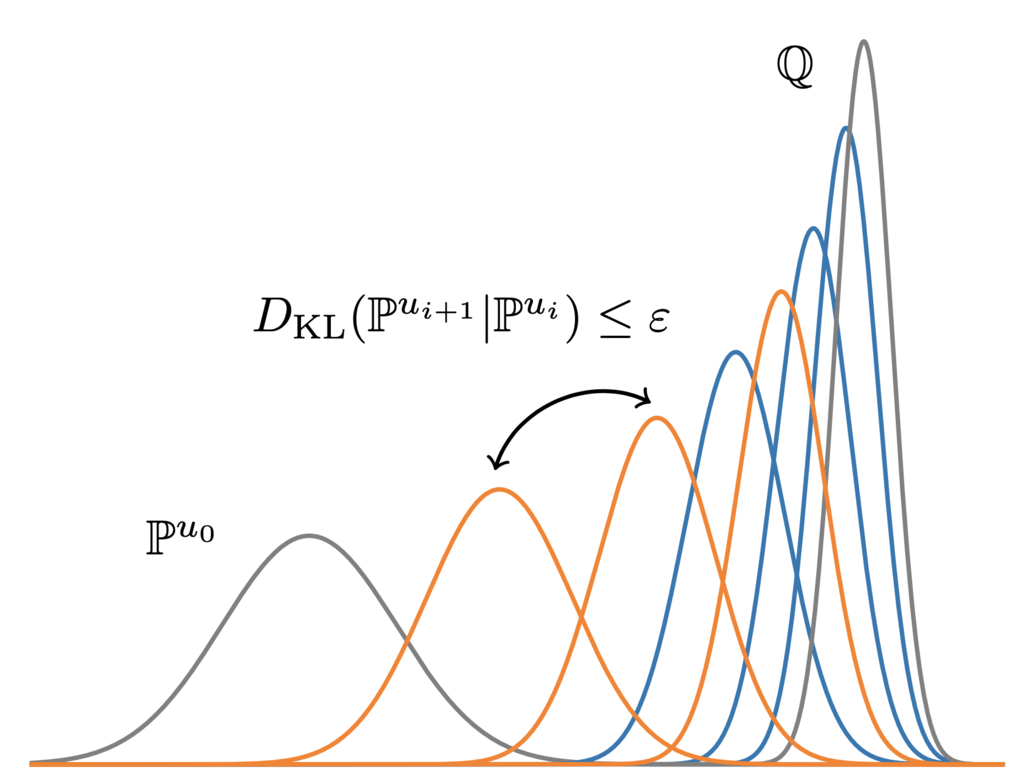

Solving stochastic optimal control problems with quadratic control costs can be viewed as approximating a target path space measure, e.g., via gradient-based optimization. In practice, however, this optimization is challenging, in particular if the target measure differs substantially from the prior. In this work, we therefore approach the problem by iteratively solving constrained problems that incorporate trust regions, so that the target measure is approached gradually and systematically.
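
The trust-region idea can be illustrated on a discrete toy problem instead of path space. Assuming a prior and a target over a handful of states, each step below solves min KL(q‖p) subject to KL(q‖q_k) ≤ ε. The Lagrangian solution lies on the geometric path q ∝ q_k^(1-t) · p^t, and KL(q_t‖q_k) grows monotonically in t, so a bisection on t is enough. The function names and the step size `eps` are hypothetical, and this is not the paper's path-space algorithm.

```python
import numpy as np

def kl(q, p):
    """KL(q || p) for strictly positive discrete distributions."""
    return float(np.sum(q * (np.log(q) - np.log(p))))

def geometric_mix(q_old, target, t):
    """Normalized q_old^(1 - t) * target^t, computed in log space."""
    log_q = (1.0 - t) * np.log(q_old) + t * np.log(target)
    log_q -= log_q.max()
    q = np.exp(log_q)
    return q / q.sum()

def trust_region_step(q_old, target, eps, iters=60):
    """argmin_q KL(q || target) subject to KL(q || q_old) <= eps.

    The minimizer lies on the geometric path between q_old and the target;
    bisection finds the largest t whose mixture still satisfies the bound.
    """
    if kl(target, q_old) <= eps:
        return target.copy()
    lo, hi = 0.0, 1.0  # invariant: t = lo satisfies the trust region
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if kl(geometric_mix(q_old, target, mid), q_old) <= eps:
            lo = mid
        else:
            hi = mid
    return geometric_mix(q_old, target, lo)

prior = np.array([0.97, 0.01, 0.01, 0.01])
target = np.array([0.01, 0.01, 0.01, 0.97])
q = prior
for k in range(10):
    q = trust_region_step(q, target, eps=0.5)
    print(k, round(kl(q, target), 3))
```

Each iteration moves only as far as the bound allows, so the distance to the target shrinks in controlled steps instead of one large jump from a prior that differs strongly from the target.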

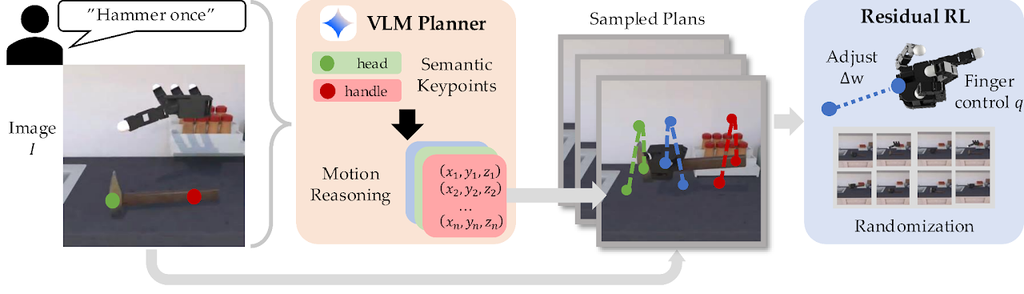

We propose a method for training dexterous robotic hands using vision-language models to generate task-relevant 3D trajectories. Our system identifies key points in a scene and creates coarse motion trajectories for the hand and objects. We then train a residual reinforcement learning policy to follow these trajectories in simulation. This approach enables the learning of robust manipulation policies that transfer to real-world robotic hands without the need for human demonstrations or custom rewards.

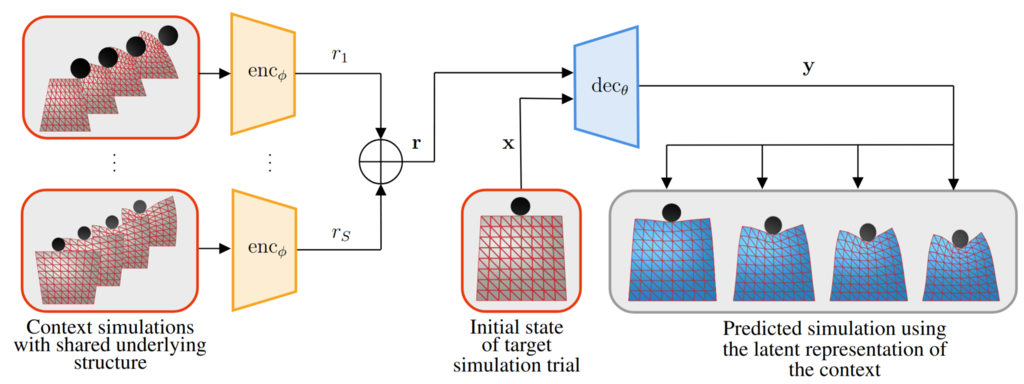

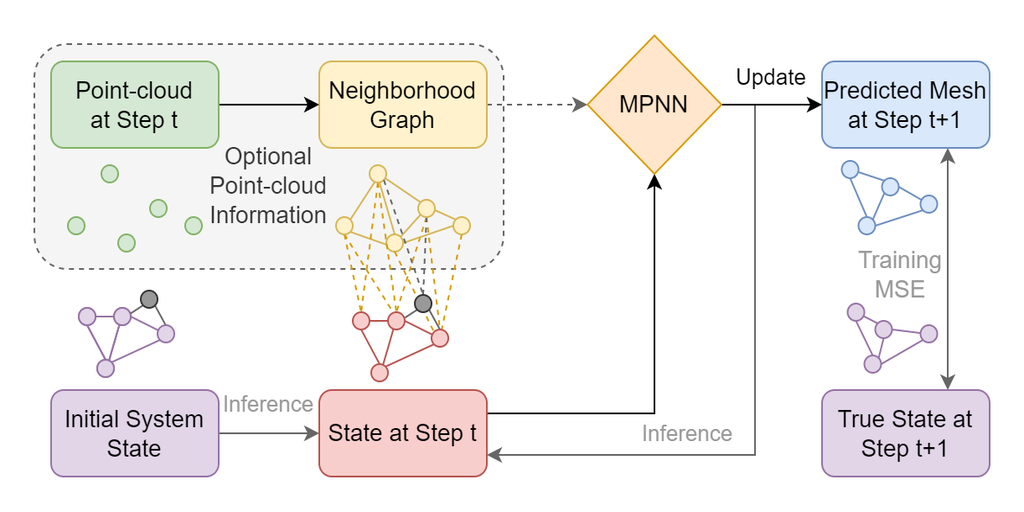

Meta Neural Graph Operator (MaNGO) enables adaptable, data-driven physical simulation through meta-learning. It learns latent physical parameters from related simulations using a Conditional Neural Process (CNP), encoding them into a compact representation that guides prediction. A spatiotemporal encoder processes evolving graph sequences via temporal convolutions and Deep Set aggregation, while the neural-operator-based decoder predicts full trajectories in one forward pass through alternating spatial message passing and temporal convolution, achieving fast and stable simulations across diverse physical systems.
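
Deep Set aggregation is what lets a CNP-style encoder take any number of context items in any order. A minimal sketch, assuming random stand-in weights `W_phi` and `W_rho` (hypothetical and untrained) in place of MaNGO's learned networks: embed each element, average the embeddings, then map the mean to a latent.

```python
import numpy as np

rng = np.random.default_rng(0)
W_phi = rng.normal(size=(3, 16))  # hypothetical per-element encoder weights
W_rho = rng.normal(size=(16, 4))  # hypothetical post-aggregation weights

def deep_set_encode(context):
    """Encode a set of context vectors (n, 3) into one latent vector (4,).

    phi is applied to every element independently, the results are averaged,
    and rho maps the mean to the latent. Averaging makes the output invariant
    to the order of the context elements.
    """
    h = np.tanh(context @ W_phi)    # phi: per-element embedding
    pooled = h.mean(axis=0)         # permutation-invariant aggregation
    return np.tanh(pooled @ W_rho)  # rho: pooled embedding -> latent

context = rng.normal(size=(5, 3))
z = deep_set_encode(context)
z_shuffled = deep_set_encode(context[rng.permutation(5)])
print(np.allclose(z, z_shuffled))  # True
```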

Graph-based learned simulators enable fast and accurate physical simulation on unstructured meshes but struggle with global effects and long-term simulations. Rolling Diffusion-Batched Inference Network (ROBIN) addresses these issues through two innovations. A novel parallel inference scheme for diffusion-based simulators overlaps denoising across time steps to amortize computational cost, while a Hierarchical Graph Neural Network based on algebraic multigrid coarsening allows multiscale message passing across mesh resolutions.

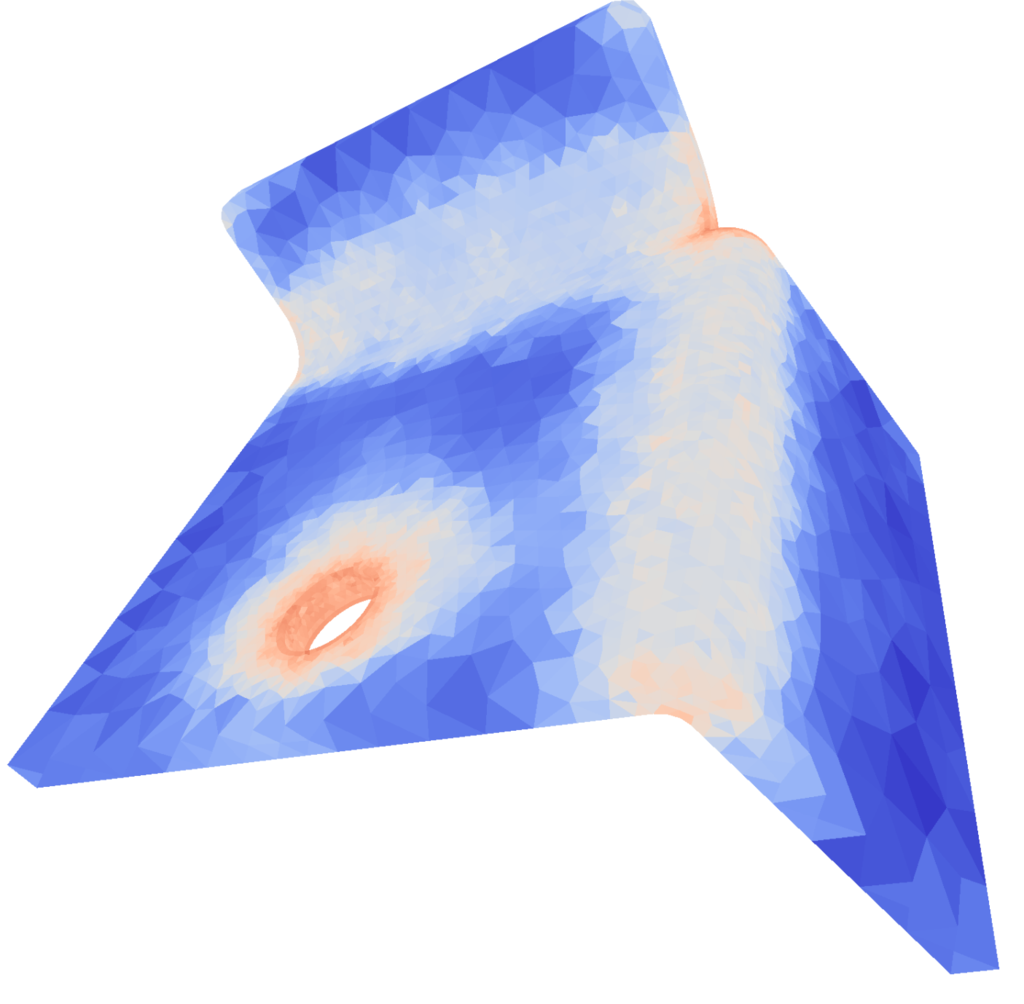

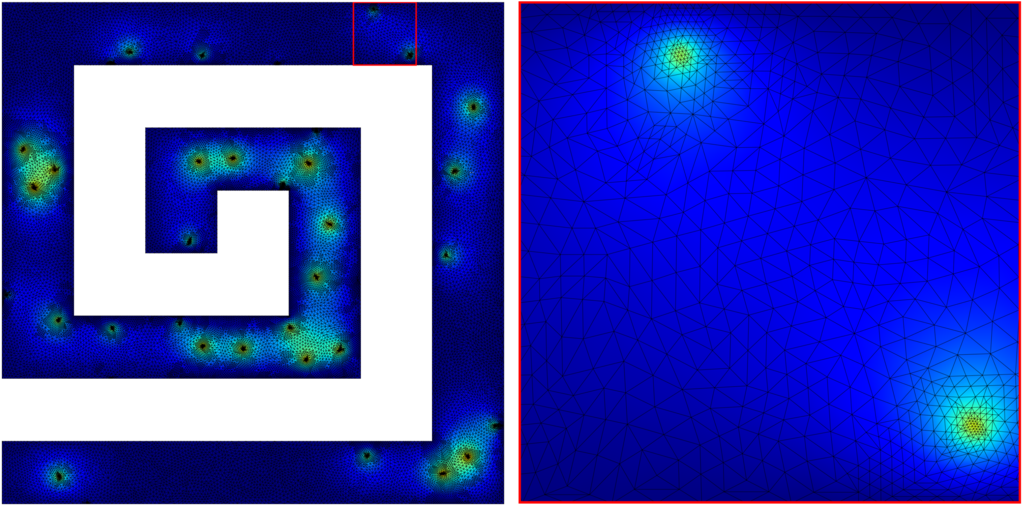

Adaptive meshes allow the efficient simulation of physical systems by allocating computational resources to the important parts of the simulated domain. Adaptive Meshing By Expert Reconstruction (AMBER) frames adaptive mesh generation as a supervised learning task with two stages. First, a graph neural network predicts a spatially varying scalar sizing field. Second, a non-parametric generator produces the mesh from this field. AMBER refines the sizing field iteratively, starting from a coarse uniform mesh. From the corresponding graph, it predicts vertex-level target element sizes, which are interpolated into a smooth continuous field to guide the generation of an adaptive mesh.
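
The last step, turning vertex-level target sizes into a continuous field, can be approximated by linear (barycentric) interpolation inside each triangle of the coarse mesh. This is an assumption for illustration; AMBER's actual interpolation scheme may differ, and `interpolate_sizing` is a hypothetical helper.

```python
import numpy as np

def barycentric_coords(p, a, b, c):
    """Barycentric coordinates of 2D point p in triangle (a, b, c)."""
    t = np.column_stack([b - a, c - a])  # 2x2 matrix of edge vectors
    l1, l2 = np.linalg.solve(t, p - a)
    return np.array([1.0 - l1 - l2, l1, l2])

def interpolate_sizing(p, verts, sizes):
    """Linearly interpolate vertex-level element sizes at point p."""
    w = barycentric_coords(p, *verts)
    return float(w @ sizes)

verts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
sizes = np.array([0.1, 0.5, 0.3])  # hypothetical predicted target sizes
print(interpolate_sizing(np.array([0.25, 0.25]), verts, sizes))
```

Inside each element the field is linear and at every vertex it reproduces the predicted size exactly, so neighbouring triangles agree on shared edges and the field is continuous.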

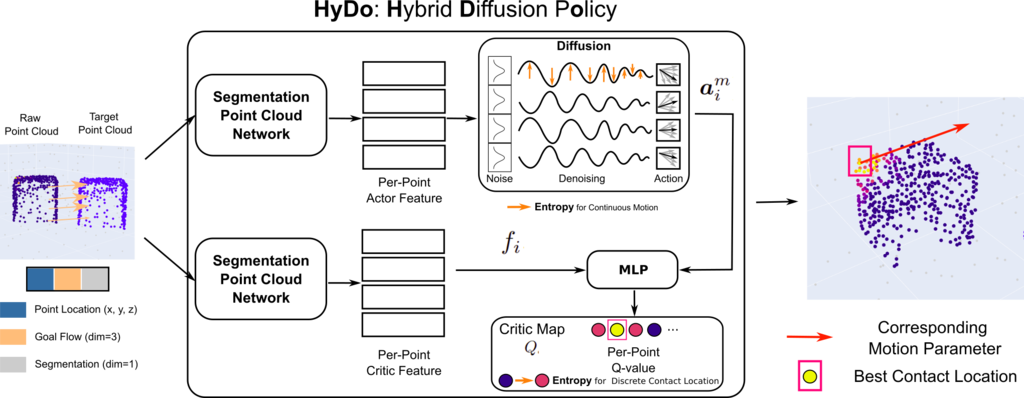

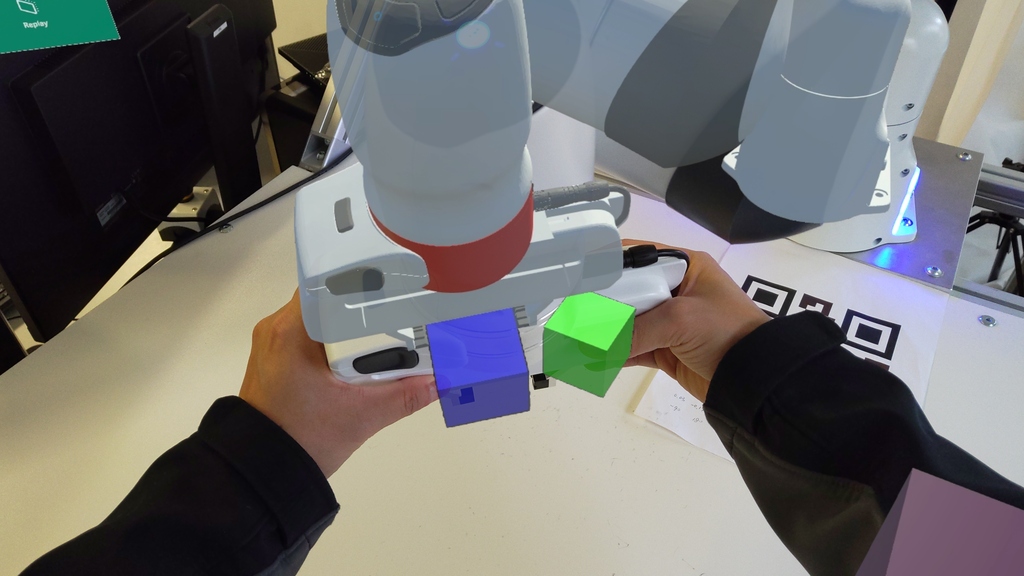

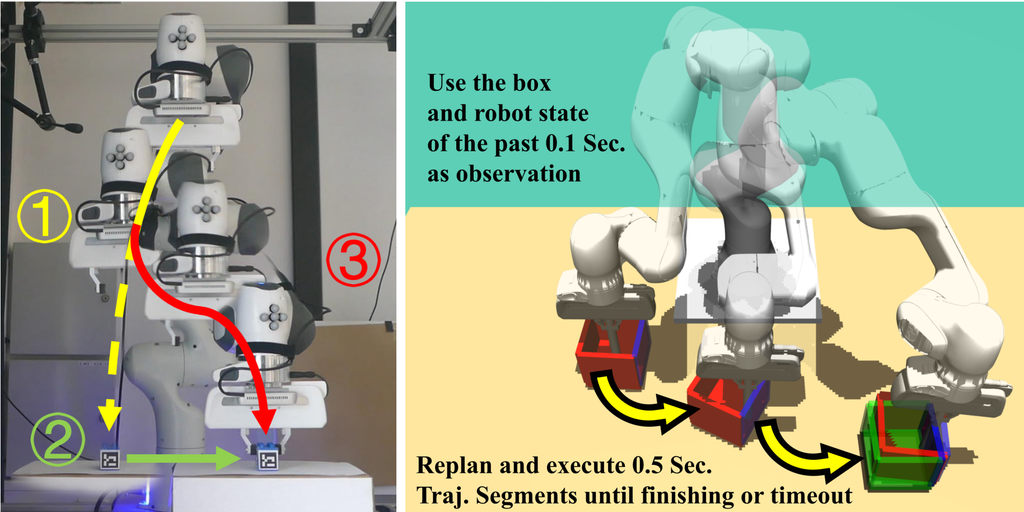

We introduce HyDo, a two-fold Hybrid Diffusion Policy that unifies discrete contact selection and a diffusion-based continuous motion policy within a maximum-entropy RL framework derived through structured variational inference. This hybrid exploration strategy promotes diverse behavior in non-prehensile manipulation tasks, improves 6-DoF pose-alignment success from 53% to 72%, and transfers zero-shot from simulation to the real robot.

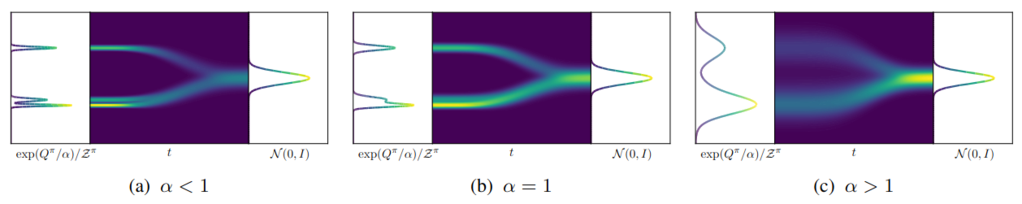

Diffusion models have proven beneficial in representing highly complex and multimodal distributions in high-dimensional spaces, but they lack tractable statistics such as the marginal entropy, which is required in many reinforcement learning objectives. DIME proposes a tractable lower bound to the maximum entropy reinforcement learning (MaxEntRL) objective, thereby enabling training of diffusion-based policies in the MaxEntRL setting. The results show that DIME outperforms all diffusion-based methods and performs comparably with SOTA Gaussian-based RL methods.

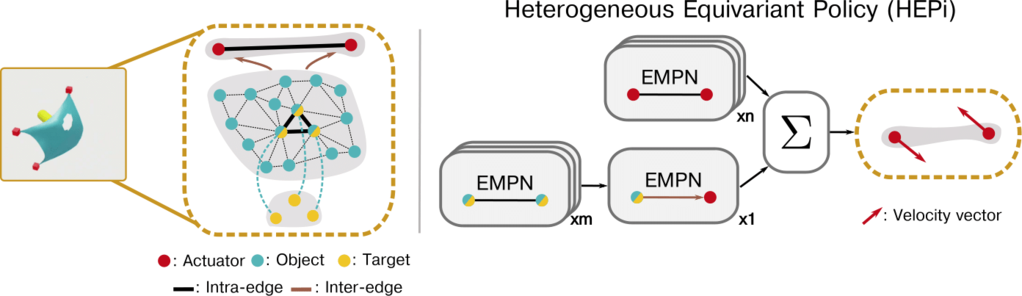

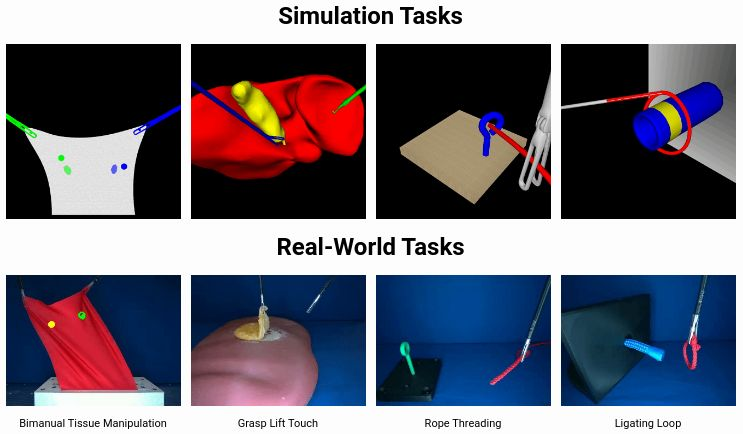

In this work, we introduce Heterogeneous Equivariant Policy (HEPi), an SE(3)-equivariant policy for manipulating diverse geometries and deformable objects, with the potential to extend to tasks involving multiple actuators. Trained with reinforcement learning, HEPi achieves higher average returns, improved sample efficiency, and better generalization to unseen objects compared to Transformer baselines.
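
SE(3) equivariance can be checked numerically: for a model with vector-valued outputs, translating the input should leave the output unchanged and rotating the input should rotate the output. The `toy_equivariant_layer` below is a hypothetical stand-in, far simpler than HEPi, that builds per-point vectors from centered positions scaled by rotation-invariant distances; the check itself carries over to any model that outputs vectors.

```python
import numpy as np

def random_rotation(rng):
    """Random 3D rotation matrix via QR decomposition, with det = +1."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # fix column signs
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]       # turn a reflection into a rotation
    return q

def toy_equivariant_layer(points):
    """Map a point cloud (N, 3) to per-point vectors (N, 3).

    Relative positions remove translations; scaling by a function of the
    distance is rotation-invariant, so rotating the input rotates the output.
    """
    rel = points - points.mean(axis=0)
    dist = np.linalg.norm(rel, axis=1, keepdims=True)
    return rel * np.tanh(dist)

rng = np.random.default_rng(0)
pts = rng.normal(size=(8, 3))
R, t = random_rotation(rng), rng.normal(size=3)
lhs = toy_equivariant_layer(pts @ R.T + t)  # transform, then apply the layer
rhs = toy_equivariant_layer(pts) @ R.T      # apply the layer, then rotate
print(np.allclose(lhs, rhs))  # True
```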

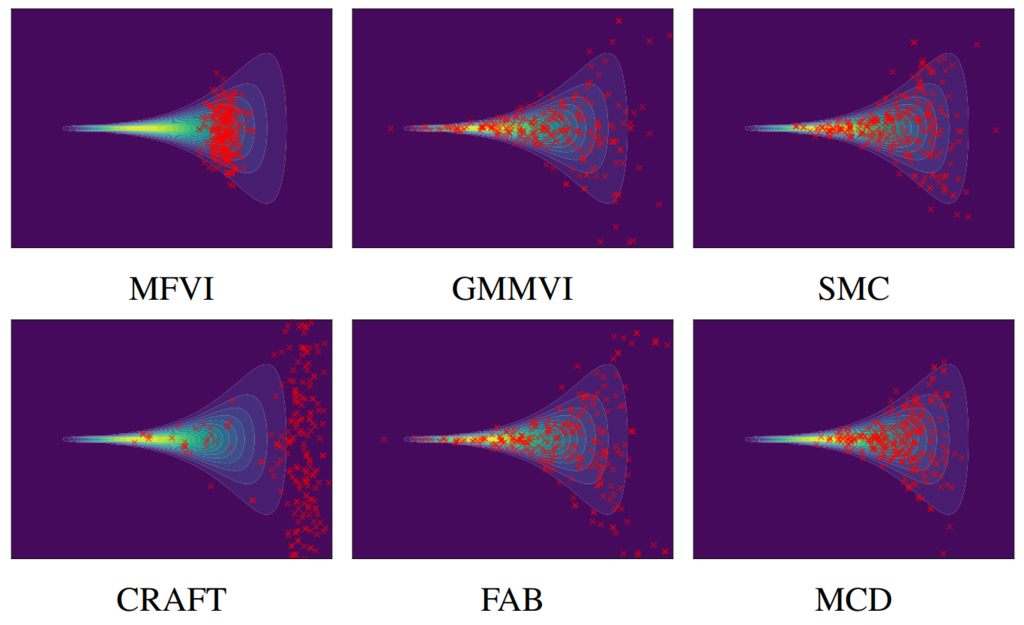

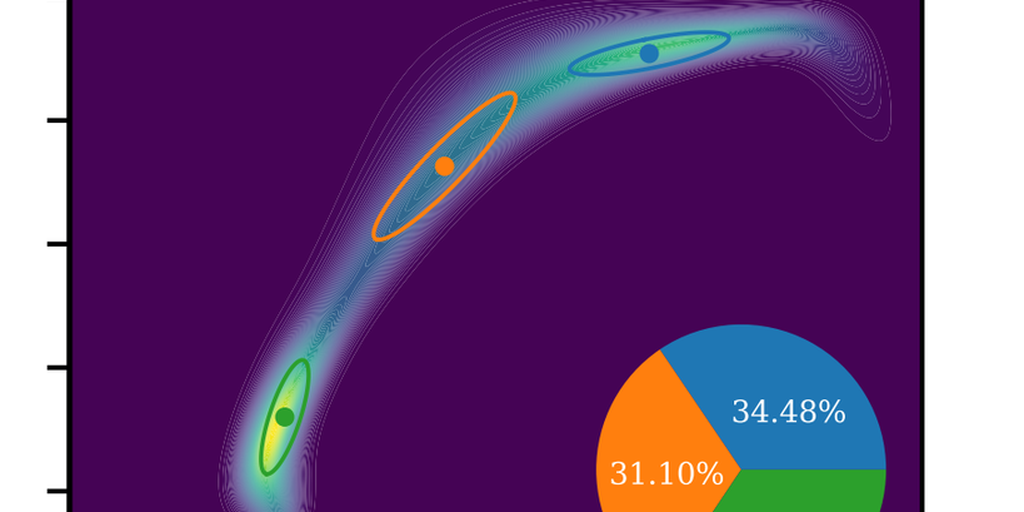

We propose end-to-end learnable Gaussian mixture priors (GMPs) for diffusion-based sampling methods. GMPs offer improved control over exploration, adaptability to target support, and increased expressiveness to counteract mode collapse. We further leverage the structure of mixture models by proposing a strategy to iteratively refine the model by adding mixture components during training.

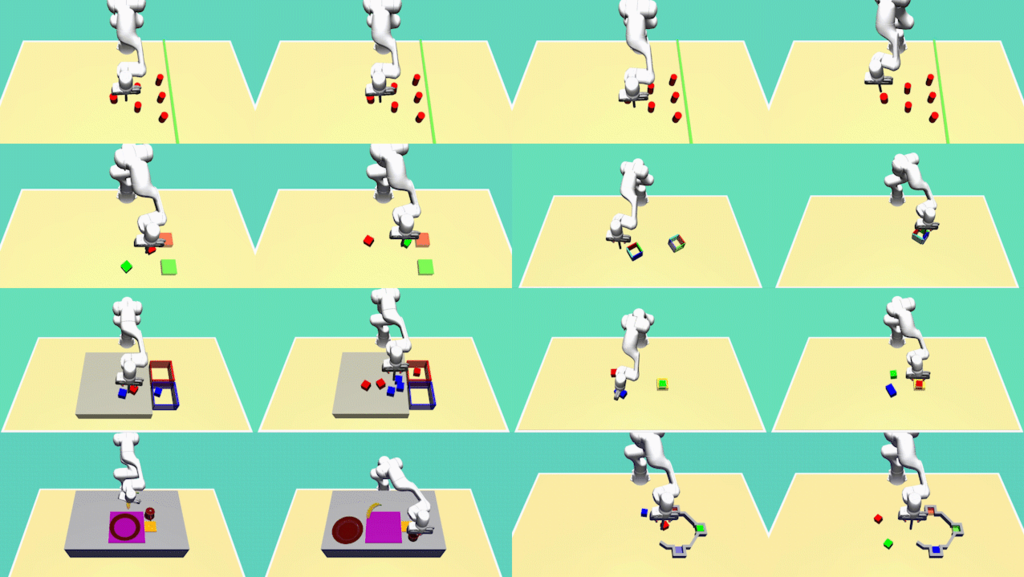

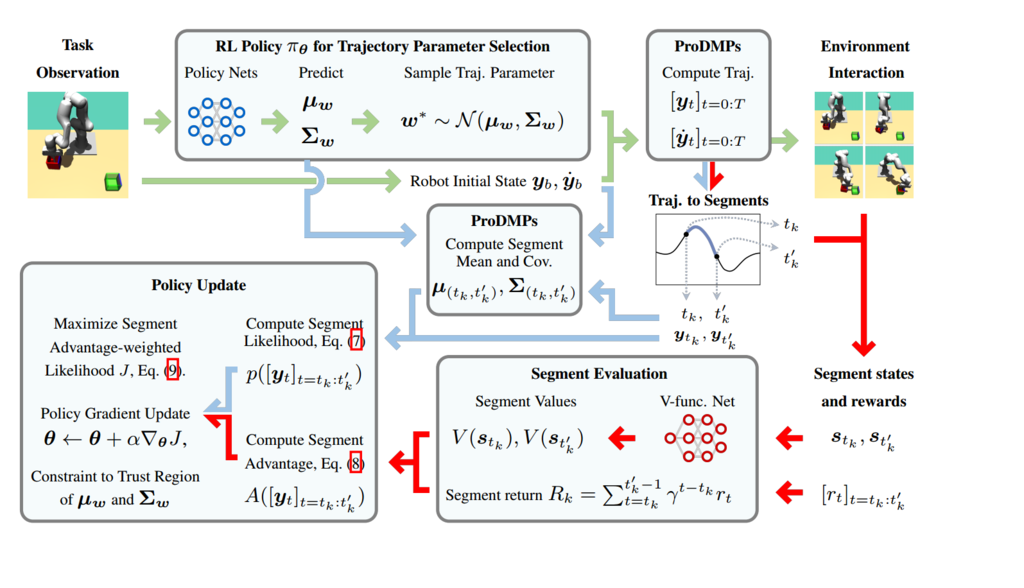

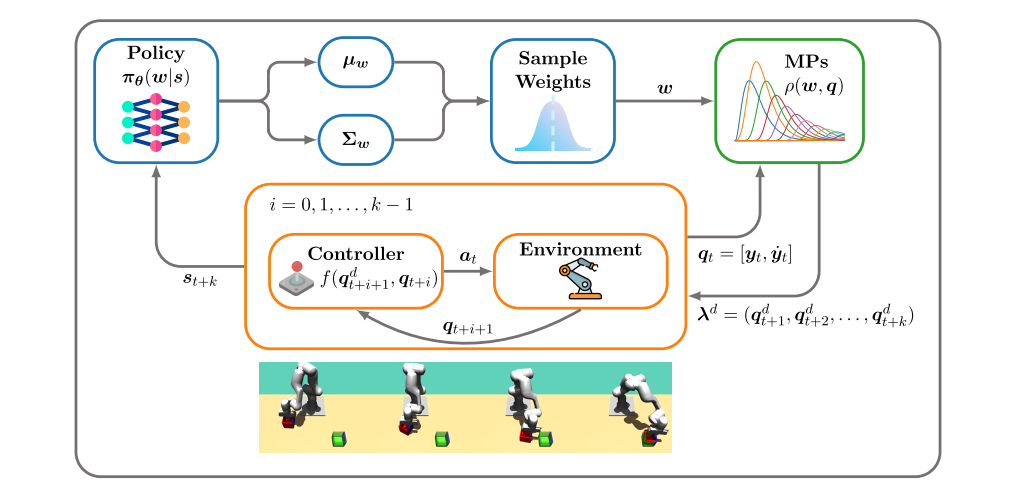

We introduce TOP-ERL, a novel RL algorithm that enables off-policy learning in an episodic manner. We predict action trajectories and evaluate the values of consecutive actions using a transformer-based critic, making it highly efficient and performant in robot RL.

Motivated by superior convergence properties and compatibility with sophisticated numerical integration schemes of underdamped stochastic processes, we propose underdamped diffusion bridges, where a general density evolution is learned rather than prescribed by a fixed noising process. We apply our method to the challenging task of sampling from unnormalized densities without access to samples from the target distribution.
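
For intuition about the underdamped processes the method builds on, the sketch below samples from an unnormalized density with plain underdamped Langevin dynamics, which needs only the gradient of log p. Note the difference: here the dynamics are fixed, whereas underdamped diffusion bridges learn the density evolution. The step size `dt`, the friction `gamma`, and the semi-implicit Euler discretization are assumptions chosen for simplicity.

```python
import numpy as np

def underdamped_langevin(grad_log_p, x0, n_steps=2000, dt=0.05, gamma=1.0, seed=0):
    """Simulate underdamped Langevin dynamics for a batch of particles.

    dx = v dt,  dv = (grad log p(x) - gamma * v) dt + sqrt(2 * gamma) dW.
    With unit mass, the x-marginal of the stationary distribution is p(x), so
    the normalizing constant of p is never needed. The semi-implicit
    Euler-Maruyama step used here has a small discretization bias in dt.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    v = np.zeros_like(x)
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        v = v + dt * (grad_log_p(x) - gamma * v) + np.sqrt(2.0 * gamma * dt) * noise
        x = x + dt * v  # position update uses the new velocity
    return x

# Unnormalized target: log p(x) = -(x - 2)^2 / 2, i.e. N(2, 1) up to a constant.
samples = underdamped_langevin(lambda x: -(x - 2.0), x0=np.zeros(5000))
print(samples.mean(), samples.var())
```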

One model generates grasps for a wide range of gripper architectures. In this work, we present a method and data-generation framework that generalizes grasp synthesis across multiple embodiments. The equivariant architecture provides efficient encoding and state-of-the-art data efficiency, enabling the method to adapt to previously unseen grippers.

We are thrilled to share that our lab has been awarded an ERC Consolidator Grant for my project SMARTI³ – Scalable Manipulation Learning through AR-enhanced Teleoperation enabling Intuitive Interactive Instructions. We will investigate innovative AR interfaces and develop interactive robot foundational models that can react in real time to user inputs coming from AR. Exciting times ahead!

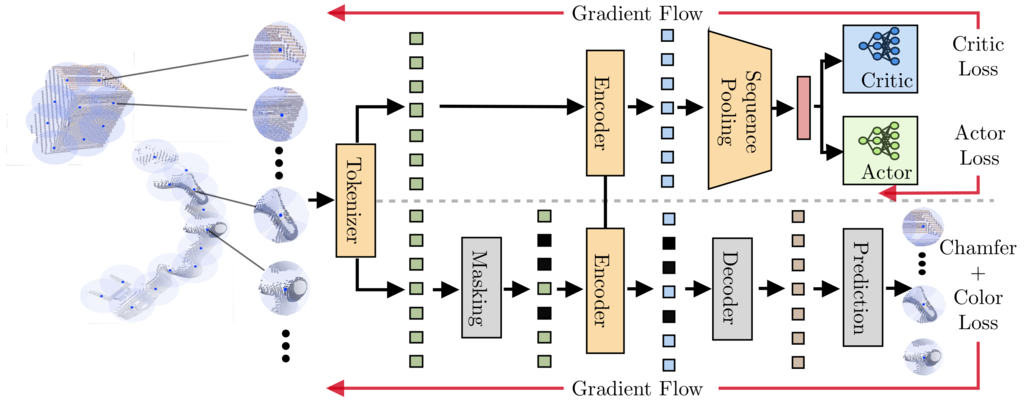

PointPatchRL is a method for Reinforcement Learning on point clouds that harnesses their 3D structure to extract task-relevant geometric information from the scene and learn complex manipulation tasks purely from rewards.
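
A common way to turn a raw point cloud into patches is farthest point sampling for the patch centers followed by k-nearest-neighbor grouping. Whether PointPatchRL uses exactly this tokenization is an assumption here; the helpers and the sizes `n_patches=32`, `k=16` are hypothetical.

```python
import numpy as np

def farthest_point_sampling(points, n_centers, start=0):
    """Greedily pick n_centers indices that are spread out over the cloud."""
    centers = [start]
    dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(n_centers - 1):
        idx = int(np.argmax(dist))  # point farthest from all chosen centers
        centers.append(idx)
        dist = np.minimum(dist, np.linalg.norm(points - points[idx], axis=1))
    return np.array(centers)

def make_patches(points, n_patches, k):
    """Group a point cloud (N, 3) into n_patches local patches of k points each.

    Each patch is expressed relative to its center, so patches carry local
    geometry while the centers carry the global layout of the scene.
    """
    centers = points[farthest_point_sampling(points, n_patches)]
    d = np.linalg.norm(points[None, :, :] - centers[:, None, :], axis=-1)  # (P, N)
    knn = np.argsort(d, axis=1)[:, :k]                                     # (P, k)
    patches = points[knn] - centers[:, None, :]                            # (P, k, 3)
    return centers, patches

cloud = np.random.default_rng(0).uniform(size=(1024, 3))
centers, patches = make_patches(cloud, n_patches=32, k=16)
print(centers.shape, patches.shape)  # (32, 3) (32, 16, 3)
```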

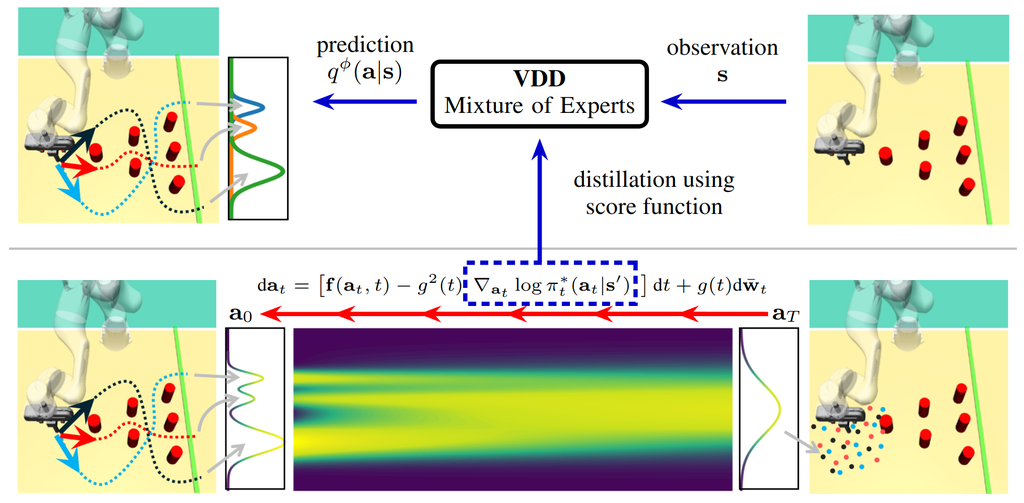

Is your diffusion model too slow for real-time control? Check out VDD, a novel diffusion model distillation method that distills diffusion models into a Mixture of Experts with single-step inference!

Black-box optimization solves complex problems with unknown objectives and no gradients. It is key in function optimization and episodic reinforcement learning. Traditional methods like CMA-ES struggle in noisy conditions because they rely on ranking the samples. The MORE algorithm, based on natural policy gradients, optimizes the expected fitness without ranking and shows improved performance in this challenging setting.

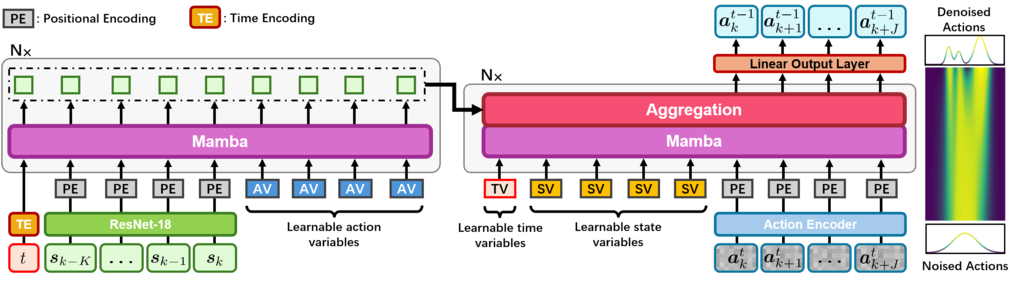

We introduce Mamba for Imitation Learning (MaIL), a robust and data-efficient alternative to transformer-based models.

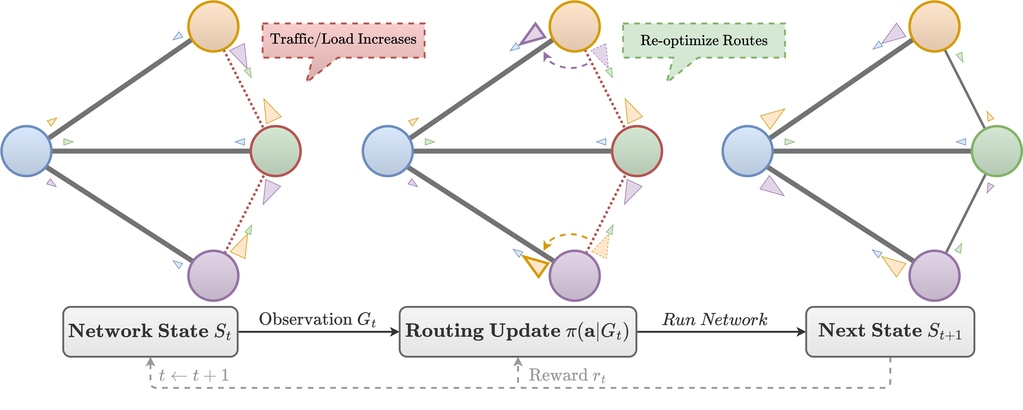

Looking to optimize routes quickly in computer networks? Don’t miss out on the packet-level dynamics available through PackeRL, our new training and evaluation framework, and on M-Slim and FieldLines, our new policy designs for sub-second routing optimization.

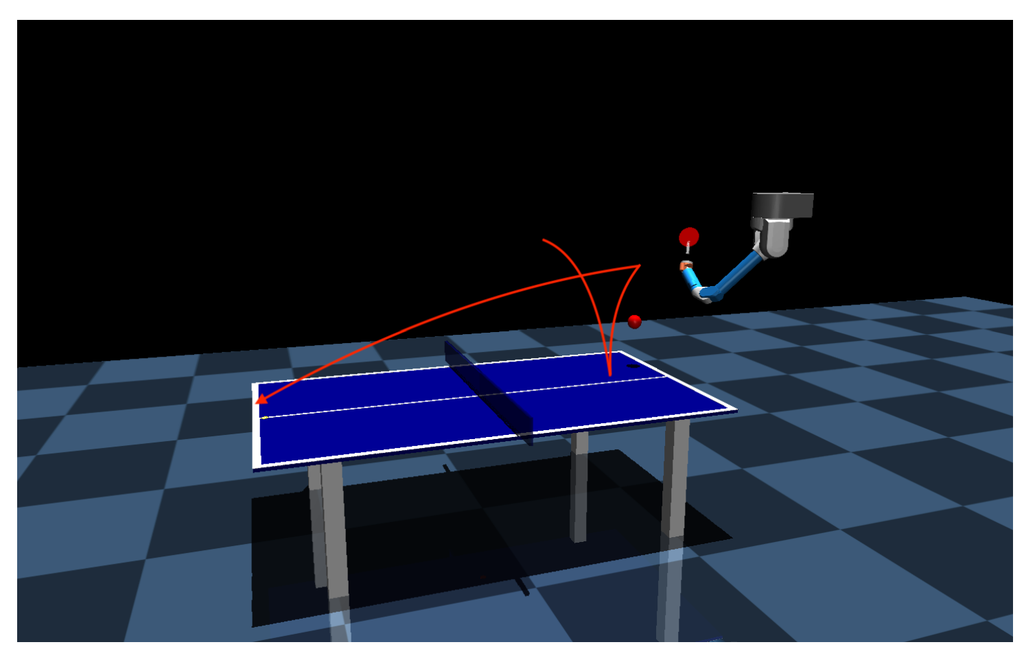

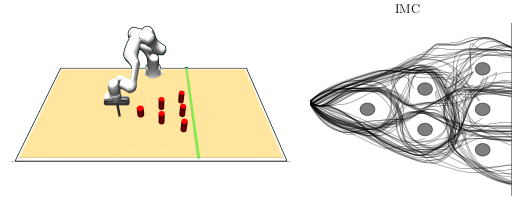

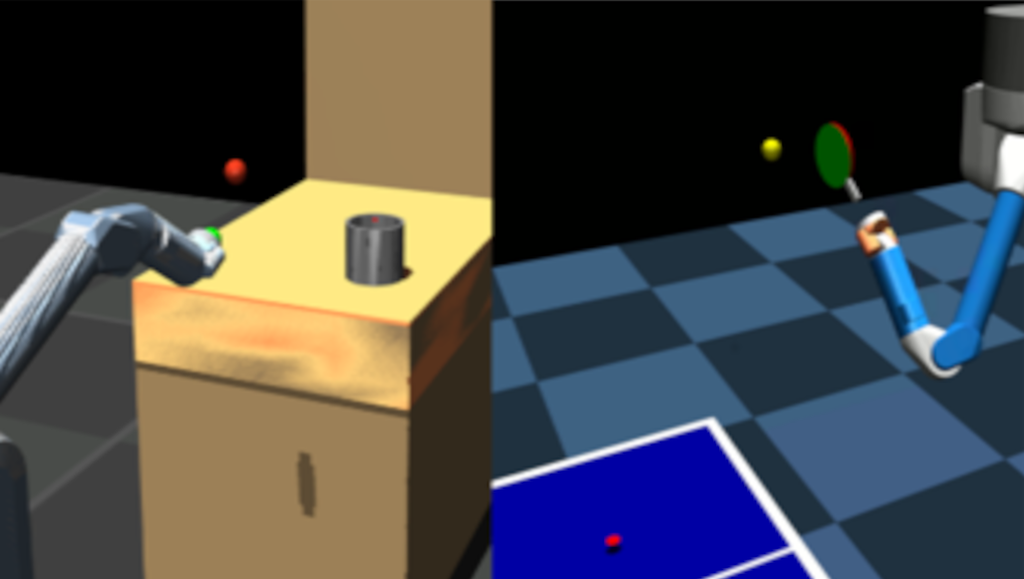

We propose Diverse Skill Learning (Di-SkilL), a novel Reinforcement Learning method for learning versatile skills for the same or similar problems. The skills are formalized as motion primitives that are adjusted using an energy-based Mixture of Experts (MoE) policy, allowing the policy to learn diverse skills with state-of-the-art performance on multi-modal robotic tasks such as table tennis.

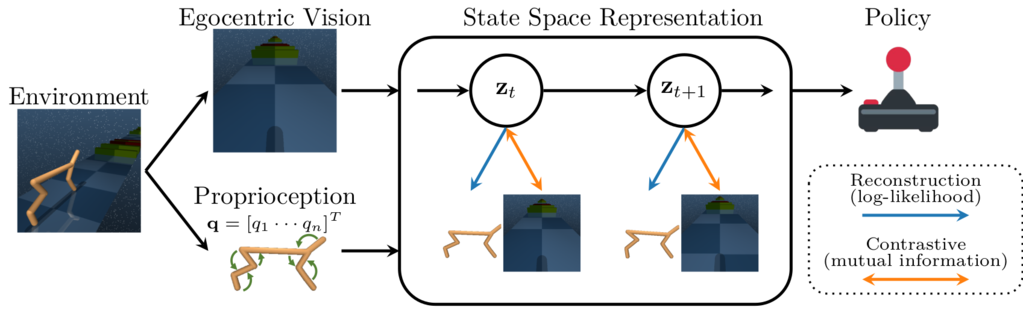

We propose a general framework for multimodal representation learning for RL, which allows tailoring self-supervised losses to each modality. We systematically show the benefits of this approach on a diverse set of tasks, featuring images with natural backgrounds, occlusions, and challenging sensor fusion in locomotion and mobile manipulation tasks.

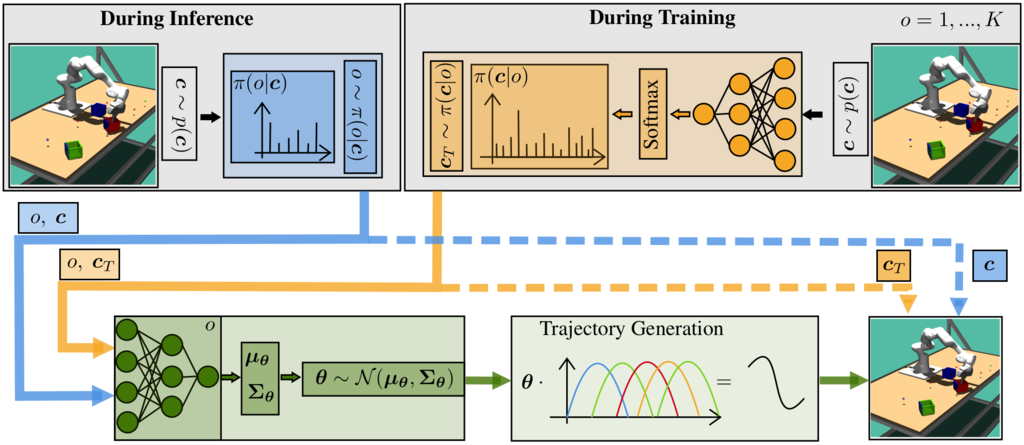

Movement Primitive Diffusion (MPD) is a diffusion-based imitation learning method for high-quality robotic motion generation that focuses on gentle manipulation of deformable objects.

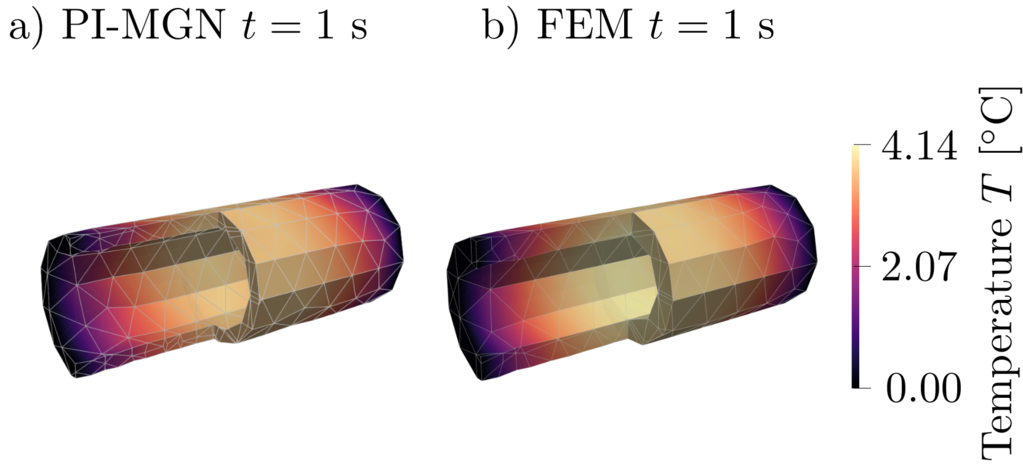

We combine Graph Neural Networks with Physics-Informed training to learn complex physical behavior on arbitrary problem domains without the need for explicit training data. We evaluate our approach on linear and nonlinear simulations in 2D and 3D, outperforming existing data-driven methods while showing strong generalization performance to unseen meshes during inference.
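
The key ingredient of physics-informed training is a loss built from the governing equation instead of reference solutions. A minimal sketch, assuming the 1D Poisson problem -u'' = f with zero boundary values on a uniform mesh, and a finite-difference Laplacian in place of the paper's GNN-based formulation:

```python
import numpy as np

def physics_loss(u, x, f):
    """Data-free loss for -u'' = f on a 1D mesh with u(0) = u(1) = 0.

    The interior residual uses a second-order finite-difference Laplacian over
    each node's two mesh neighbours (uniform spacing assumed); the boundary
    conditions enter as a penalty. No reference solution is needed.
    """
    h = x[1] - x[0]
    lap = (u[:-2] - 2.0 * u[1:-1] + u[2:]) / h**2
    residual = -lap - f[1:-1]
    boundary = u[0] ** 2 + u[-1] ** 2
    return float(np.mean(residual**2) + boundary)

x = np.linspace(0.0, 1.0, 101)
f = np.pi**2 * np.sin(np.pi * x)
print(physics_loss(np.sin(np.pi * x), x, f))  # small: exact solution
print(physics_loss(x * (1.0 - x), x, f))      # large: wrong guess
```

A learned simulator would output `u` at the mesh nodes and minimize this loss directly, which is what removes the need for simulated training data.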

We introduce a novel benchmark for variational sampling methods. Specifically, we assess the latest sampling methods, focusing on Variational Monte Carlo methods such as diffusion-based sampling methods. We analyze strengths and weaknesses of different performance criteria with a specific focus on quantifying mode collapse. Furthermore, we introduce a novel heuristic approach for quantifying mode collapse.

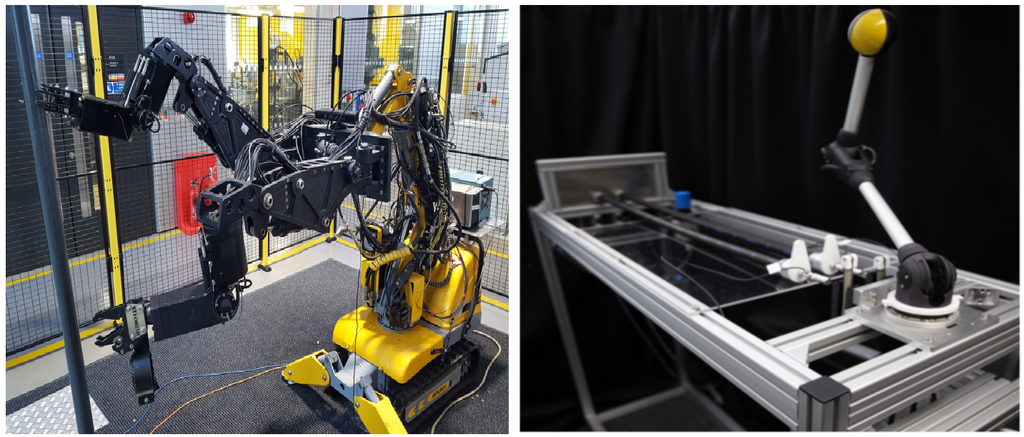

In this paper, we conducted a comprehensive user study comparing five different robot control interfaces for robot learning. This study reveals valuable insights into their usability and effectiveness. The results show that the proposed Kinesthetic Teaching interface significantly outperforms the other interfaces on both objective metrics (success rate, task completeness, and completion time) and subjective metrics (User Experience Questionnaires, UEQ+).

Now you can find us on the 4th floor of the InformatiKOM I, Building 50.19, 76131 Karlsruhe.

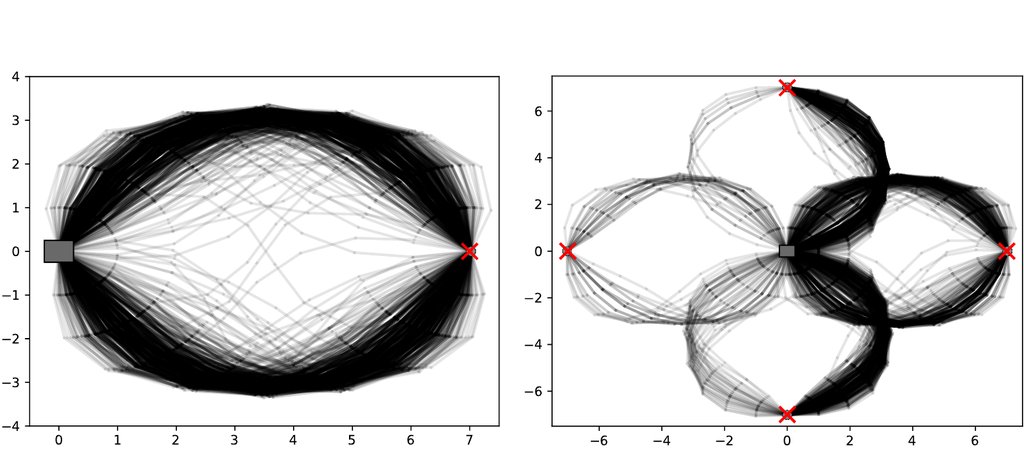

We introduce simulation benchmark environments and the corresponding Datasets with Diverse human Demonstrations for Imitation Learning (D3IL), designed explicitly to evaluate a model's ability to learn multi-modal behavior.

We propose a novel RL framework that integrates step-based information into the policy updates of Episodic RL, while preserving the broad exploration scope, movement correlation modeling and trajectory smoothness.

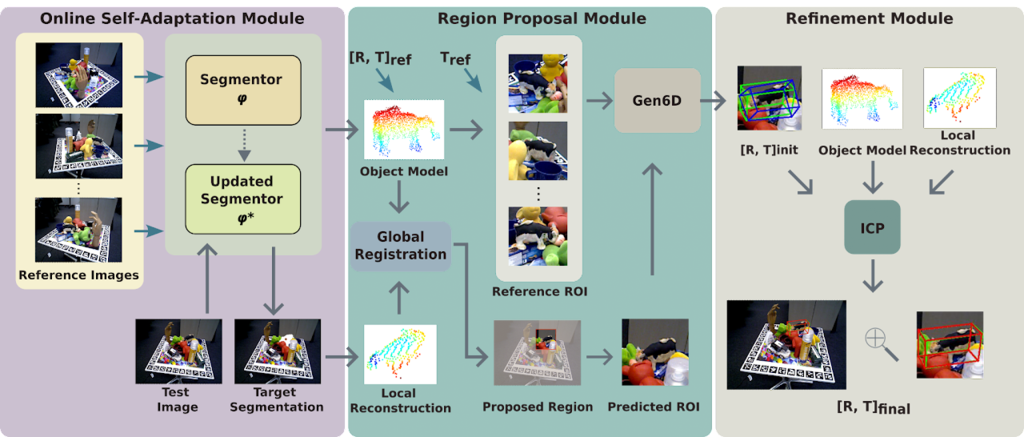

Interested in 6D pose estimation of novel objects in cluttered scenes without mesh models or ground-truth masks? Check out our CoRL 2023 paper "SA6D: Self-Adaptive Few-Shot 6D Pose Estimator for Novel and Occluded Objects".

Need a principled foundational formalism for designing hierarchical world models? Check out our new paper, where we propose a probabilistic formalism and neural network architecture for learning world models at multiple temporal abstractions/hierarchies. These lightweight latent linear models can compete with state-of-the-art transformers in predictions and additionally quantify uncertainties.

Imitation learning from diverse human demonstrations can lead to multimodal data distributions. We propose a new algorithm that combines curriculum learning and mixture of experts policies to avoid mode-averaging and learn diverse behavior.

Feel the "tingle" of optimized simulations with our novel algorithm, Autonomous Sensory Meridian Response Adaptive Swarm Mesh Refinement (ASMR). By using a network of intelligent agents within the mesh, our method dramatically improves both computational speed and accuracy. ASMR offers scalable and efficient refinements, beats common baselines and performs on par with expensive error-based strategies, while operating far more efficiently.

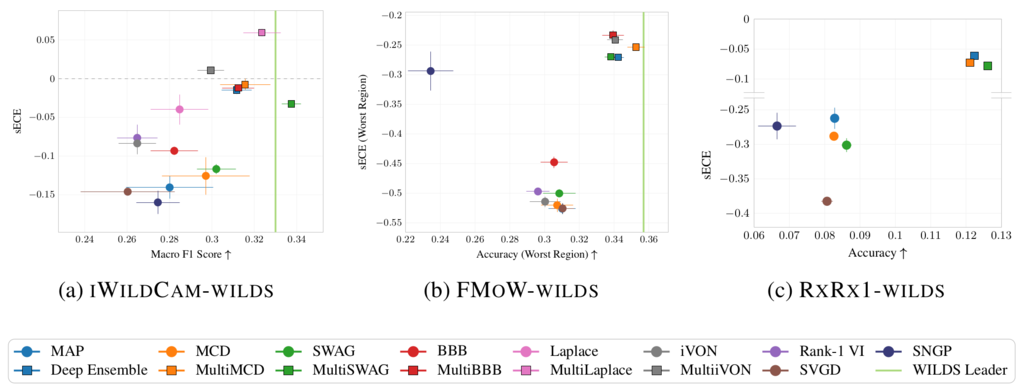

Bayesian deep learning (BDL) offers better-calibrated predictions on distribution-shifted data. We present a large-scale survey to assess modern BDL techniques using the WILDS collection's real-world datasets, emphasizing their ability to generalize and calibrate under distribution shifts across diverse neural network architectures. Our study includes the first systematic BDL evaluation for finetuning large pre-trained models, a novel calibration metric that allows distinguishing over- from underconfident predictions, and a wide range of convolutional and transformer-based neural networks. Interestingly, ensembling single-mode approximations typically enhances the models' generalization and calibration. Yet, a challenge arises when finetuning large transformer models. In such cases, "Bayes By Backprop" excels in accuracy, while SWAG achieves the best calibration.
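
To see why a signed metric helps, the sketch below computes the usual binned expected calibration error (ECE) together with a signed gap (accuracy minus confidence per bin, weighted by bin size). This illustrates the idea under our own assumptions and is not necessarily the exact metric defined in the paper. The signed weighted sum reduces to overall accuracy minus mean confidence, which is why it complements ECE rather than replacing it.

```python
import numpy as np

def calibration_errors(confidences, correct, n_bins=10):
    """Return (ECE, signed gap) from equal-width confidence bins.

    ECE sums bin-weighted |accuracy - confidence|. Keeping the sign tells
    over- from underconfidence: a negative gap means the model is more
    confident than it is accurate, a positive gap means the opposite.
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    # Equal-width bins over [0, 1]; confidence 1.0 goes into the last bin.
    bins = np.minimum((confidences * n_bins).astype(int), n_bins - 1)
    ece, signed = 0.0, 0.0
    for b in np.unique(bins):
        mask = bins == b
        gap = correct[mask].mean() - confidences[mask].mean()
        ece += mask.mean() * abs(gap)
        signed += mask.mean() * gap
    return ece, signed

over = calibration_errors(np.full(10, 0.9), [1] * 6 + [0] * 4)   # 90% sure, 60% right
under = calibration_errors(np.full(10, 0.6), [1] * 9 + [0] * 1)  # 60% sure, 90% right
print(over, under)
```

Both toy models have an ECE of 0.3, but the sign separates the overconfident case (negative) from the underconfident one (positive).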

Variational inference with Gaussian mixture models (GMMs) enables learning of highly tractable yet multi-modal approximations of intractable target distributions with up to a few hundred dimensions. The two currently most effective methods for GMM-based variational inference, VIPS and iBayes-GMM, both employ independent natural gradient updates for the individual components and their weights. We identify several design choices that distinguish both approaches and test all possible combinations. We identify a new combination of algorithmic choices that yields more accurate solutions with fewer updates than previous methods.

We introduce a novel deep reinforcement learning (RL) approach called Movement Primitive-based Planning Policy (MP3). By integrating movement primitives (MPs) into the deep RL framework, MP3 enables the generation of smooth trajectories throughout the whole learning process while effectively learning from sparse and non-Markovian rewards. Additionally, MP3 maintains the capability to adapt to changes in the environment during execution using the replanning capabilities of ProDMPs and outperforms other RL algorithms on competitive benchmark tasks.

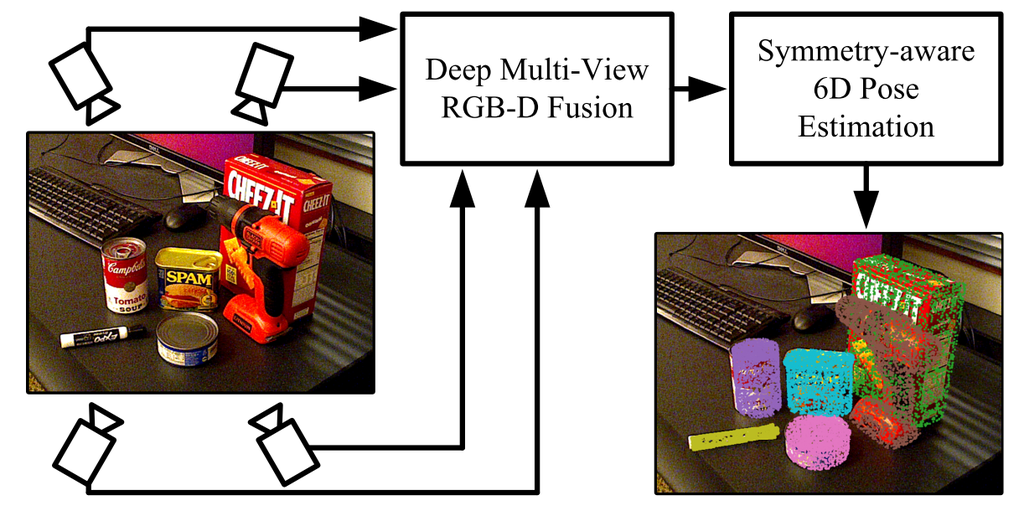

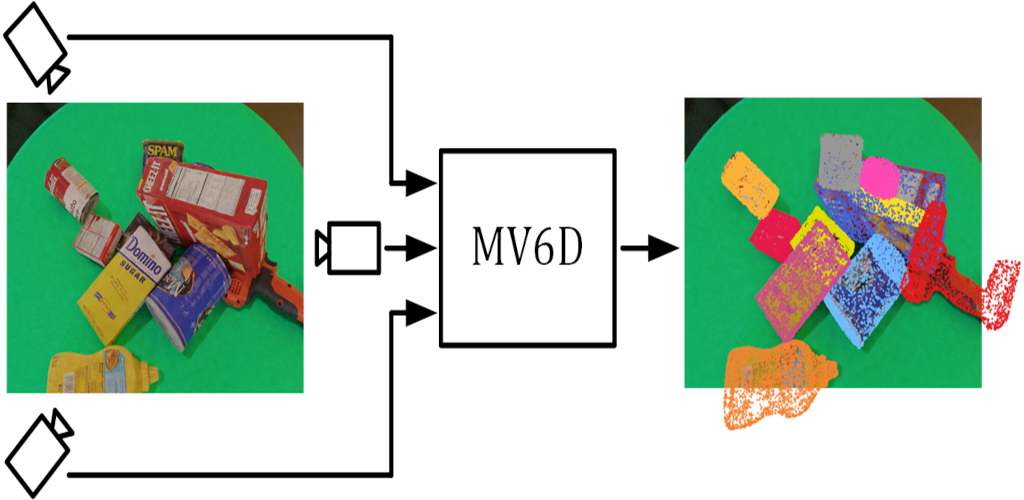

We propose a new 6D pose estimation algorithm that infers the pose of multiple objects in a scene from multiple views using point cloud and RGB information. Our method is based on keypoint detectors and multi-directional fusion of RGB and point cloud data obtained from multiple views. The keypoint detectors are extended to also work for symmetric objects. Our approach achieves unprecedented performance on several public benchmark datasets.

The Neural Process (NP) is a prominent deep neural network-based Bayesian meta-learning (BML) architecture, which has shown remarkable results in recent years. Prior work studies a range of architectural modifications to boost performance, such as attentive computation paths or improved context aggregation schemes, while the influence of the variational inference (VI) scheme remains under-explored. The proposed GMM-NP does not require complex architectural modifications, resulting in a powerful yet conceptually simple BML model that outperforms the state of the art on a range of challenging experiments, highlighting its applicability to settings where data is scarce.

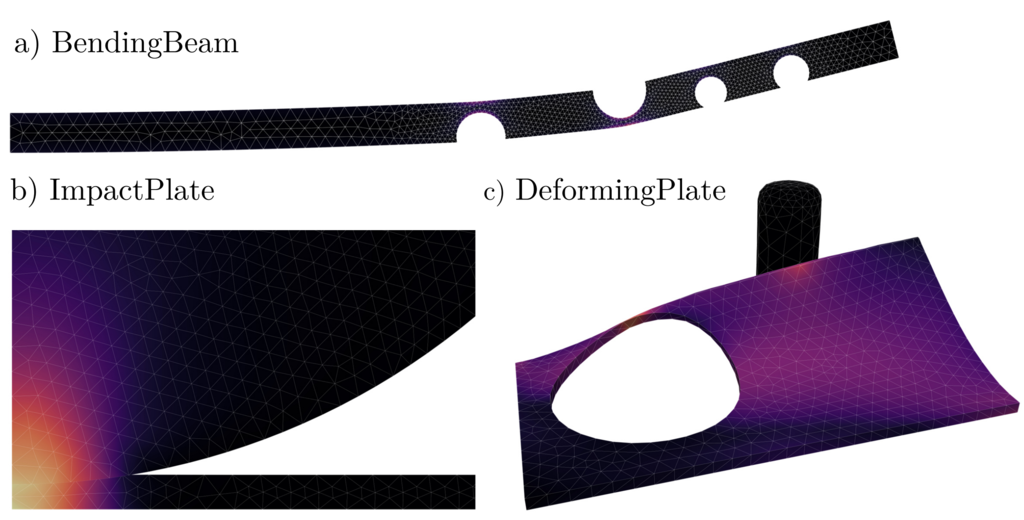

Physical simulations that accurately model reality are crucial for many engineering disciplines such as mechanical engineering and robotic motion planning. In recent years, learned Graph Network Simulators produced accurate mesh-based simulations while requiring only a fraction of the computational cost of traditional simulators.

In this paper, we propose a novel algorithm that extends Adversarial Imitation Learning to use preferences as feedback in addition to demonstrations. Results show that our method can learn from both expert and imperfect demonstrations. Experiments show the method's effectiveness on robotic manipulation benchmarks.

Movement Primitives (MPs) are a well-known concept to represent and generate modular trajectories. MPs can be broadly categorised into two types: (a) dynamics-based approaches that generate smooth trajectories from any initial state, e.g., Dynamic Movement Primitives (DMPs), and (b) probabilistic approaches that capture higher-order statistics of the motion, e.g., Probabilistic Movement Primitives (ProMPs).
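
For type (b), a ProMP represents a trajectory as a weighted sum of basis functions, y(t) = φ(t)ᵀw, with a Gaussian over the weights, w ~ N(μ_w, Σ_w). The trajectory distribution is then Gaussian with mean Φμ_w and covariance ΦΣ_wΦᵀ. A minimal single-DoF sketch, assuming normalized Gaussian basis functions and hypothetical values for `mu_w` and `Sigma_w`:

```python
import numpy as np

def rbf_basis(t, n_basis=10, var=0.02):
    """Normalized Gaussian basis functions over phase t in [0, 1]; shape (T, n_basis)."""
    centers = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-((t[:, None] - centers[None, :]) ** 2) / (2.0 * var))
    return phi / phi.sum(axis=1, keepdims=True)

t = np.linspace(0.0, 1.0, 100)
Phi = rbf_basis(t)                          # (100, 10)
mu_w = np.sin(np.linspace(0.0, np.pi, 10))  # hypothetical mean weights
Sigma_w = 0.01 * np.eye(10)                 # hypothetical weight covariance

mean_traj = Phi @ mu_w                      # mean trajectory, (100,)
cov_traj = Phi @ Sigma_w @ Phi.T            # trajectory covariance, (100, 100)

# Sampling weights and mapping them through the basis yields whole trajectories.
rng = np.random.default_rng(0)
samples = rng.multivariate_normal(mu_w, Sigma_w, size=5) @ Phi.T  # (5, 100)
```

Because the covariance over time steps is explicit, such a model captures the higher-order statistics of the motion mentioned above, e.g. how variable each part of the demonstrated movement is.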

We combine a mixture of movement primitives with a distribution matching objective to learn versatile behaviors that match the expert’s behavior and versatility.

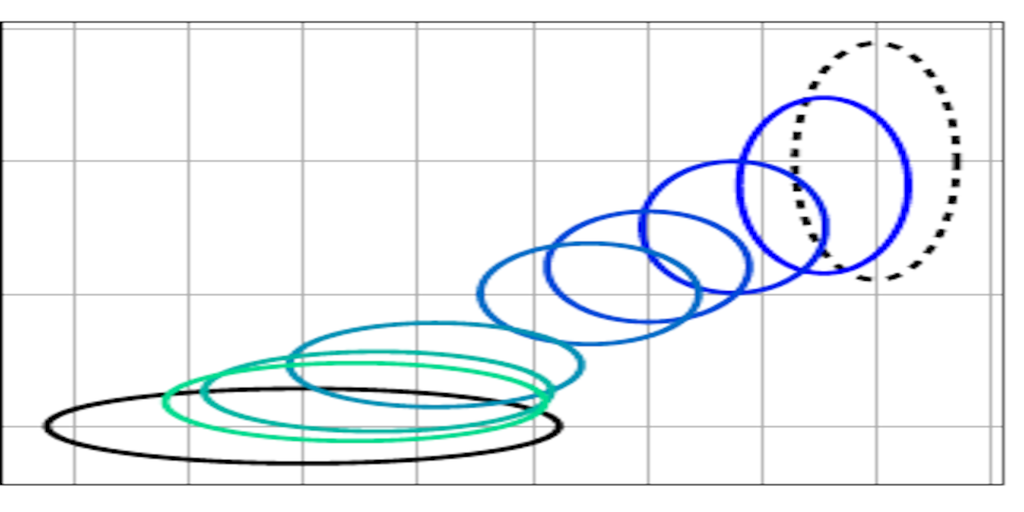

We study how recent State Space modeling approaches for Model-Based RL represent uncertainties. We find some flaws and propose a theoretically better-grounded alternative. We show it improves performance in tasks where it is important to appropriately capture uncertainty. If you want to know how this relates to the cat and the hamster, you have to read the paper.

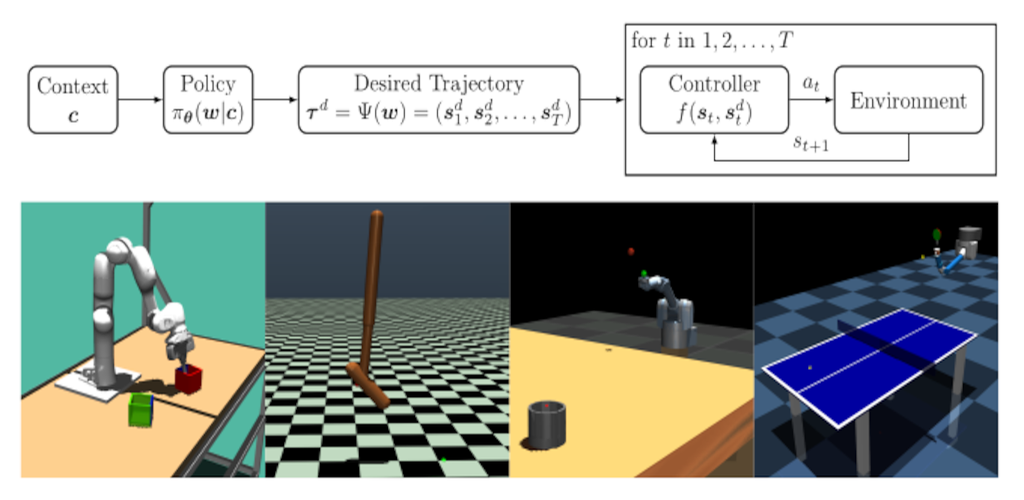

Episode-based reinforcement learning (ERL) algorithms treat reinforcement learning (RL) as a black-box optimization problem where we learn to select a parameter vector of a controller, often represented as a movement primitive, for a given task descriptor called a context.

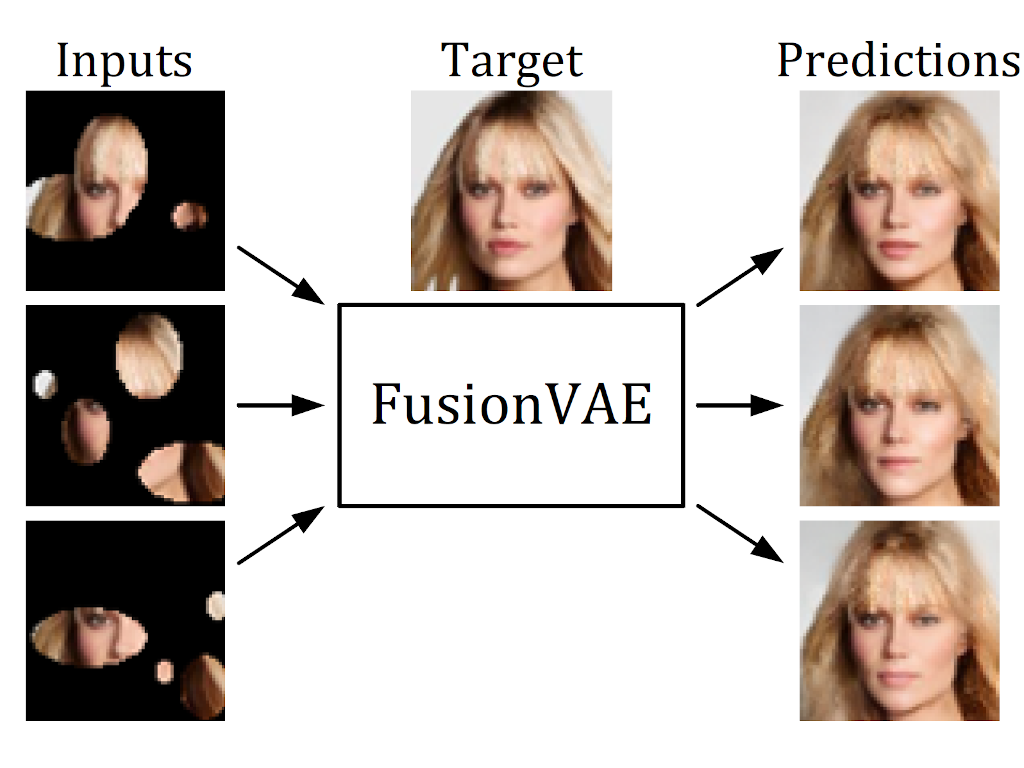

We present a novel deep hierarchical variational autoencoder that can serve as a basis for many fusion tasks. It can generate diverse image samples that are conditioned on multiple noisy, occluded, or only partially visible input images. We created three novel image fusion datasets and show that our method outperforms traditional approaches significantly.

We present a novel deep learning method that estimates the 6D poses of all objects in a cluttered scene based on multiple RGB-D images. Our approach is considerably more accurate than previous approaches, especially for heavily occluded objects, and it is robust to dynamic camera setups as well as inaccurate camera calibration.

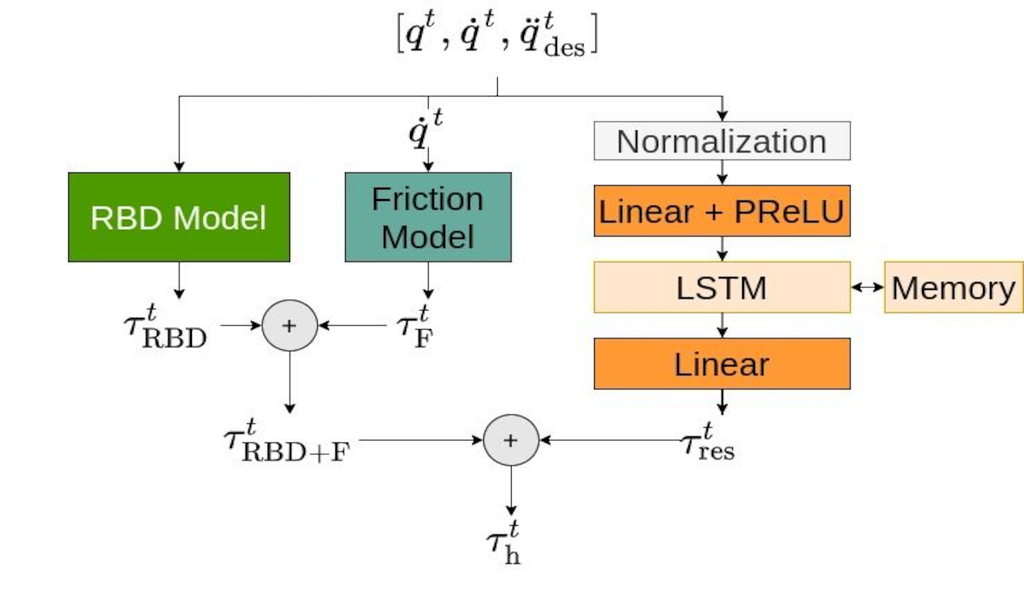

We propose a new formulation for a residual hybrid inverse dynamics model, which combines a fully physically consistent rigid-body dynamics model with a recurrent LSTM and a Coulomb friction function. The model is trained end-to-end using a new formulation of Barycentric Parameters called “Differentiable Barycentric”, which implicitly guarantees all conditions of physical consistency. In our real-robot motion tracking experiments, we show that the new model achieves compliant and precise motion tracking on unseen movements.
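
The friction part of such a hybrid model can be sketched as a Coulomb term plus a viscous term, added to the rigid-body torque and a learned residual. Replacing sign(q̇) with tanh(q̇/eps) to keep it differentiable is our assumption, not necessarily the paper's friction function; the rigid-body torque and the LSTM residual are passed in as plain numbers, and the Differentiable Barycentric parametrization is not reproduced.

```python
import numpy as np

def friction_torque(qd, f_coulomb, f_viscous, eps=1e-2):
    """Joint friction: Coulomb term f_c * sign(qd) plus viscous term f_v * qd.

    sign() is approximated by tanh(qd / eps) so the model stays differentiable
    at zero velocity, which matters for end-to-end gradient-based training.
    """
    return f_coulomb * np.tanh(qd / eps) + f_viscous * qd

def hybrid_inverse_dynamics(tau_rigid_body, qd, residual, f_coulomb, f_viscous):
    """Total torque = rigid-body model + friction + learned residual (e.g. an LSTM output)."""
    return tau_rigid_body + friction_torque(qd, f_coulomb, f_viscous) + residual

qd = np.array([-1.0, 0.0, 1.0])
print(friction_torque(qd, f_coulomb=0.5, f_viscous=0.1))
```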

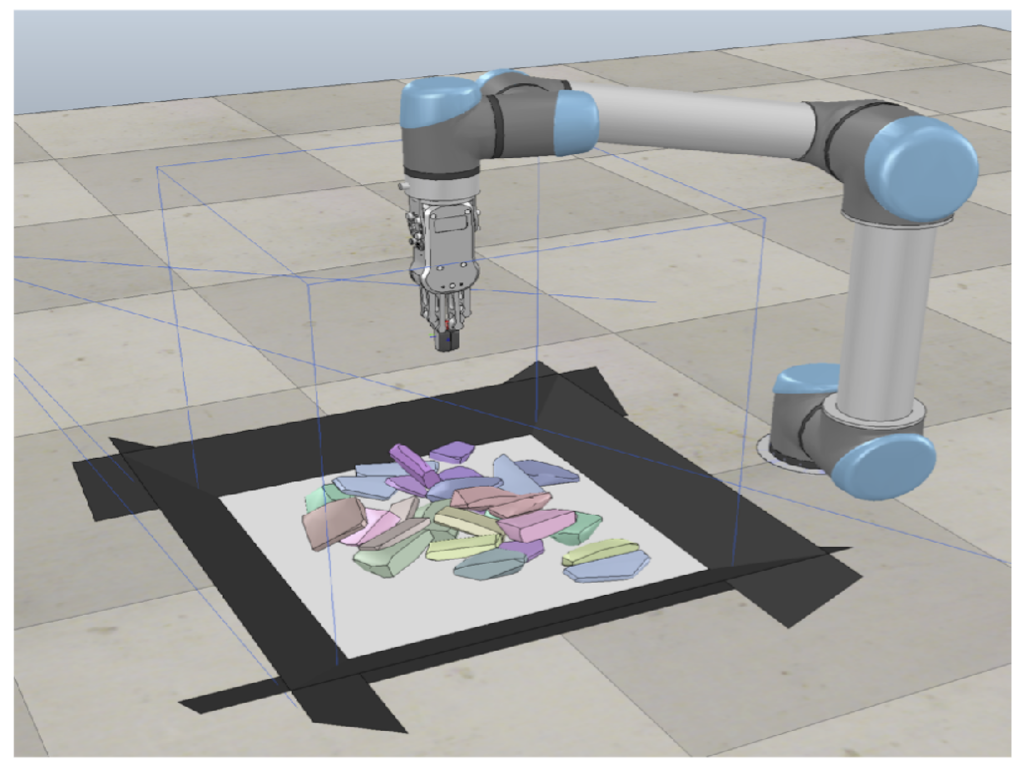

In this paper, we present a new approach for interactive scene segmentation using deep reinforcement learning. Our robot can learn to push objects in a heap such that semantic segmentation algorithms can detect every object in the heavily cluttered heap.

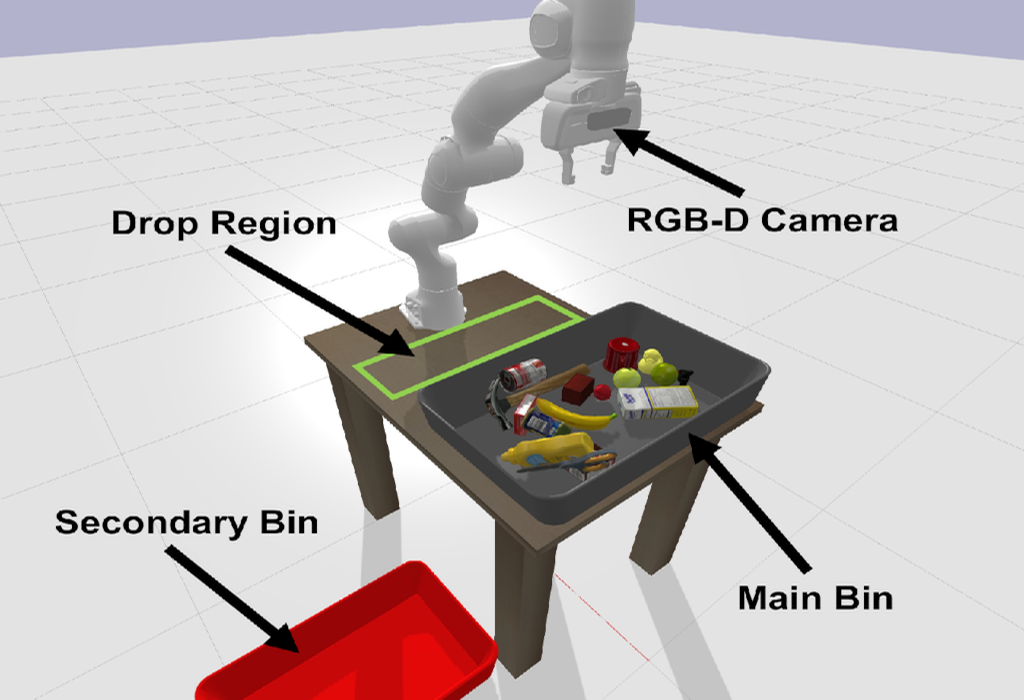

Mechanical Search (MS) is a framework for object retrieval, which uses a heuristic algorithm for pushing and rule-based algorithms for high-level planning. While rule-based policies profit from human intuition in how they work, they often perform sub-optimally. We present a deep hierarchical reinforcement learning (RL) algorithm to perform this task, showing increased search performance in terms of the number of required manipulation actions, success rate, and computation time!

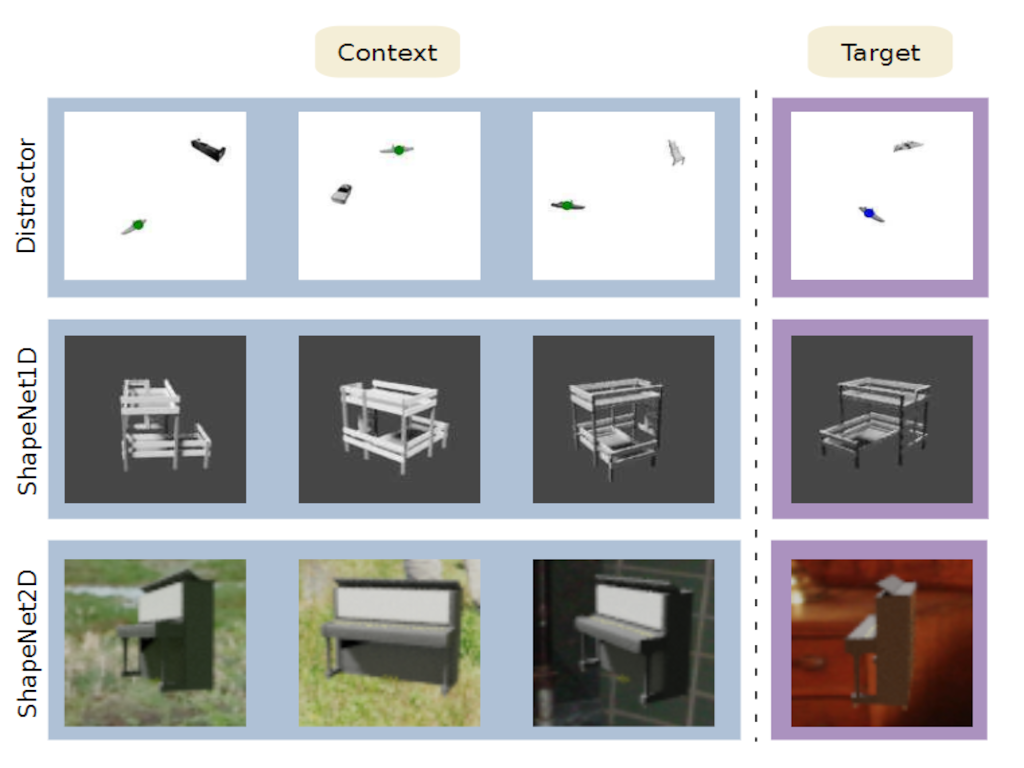

We design two new types of cross-category vision regression tasks, namely object discovery and pose estimation, which are of unprecedented complexity in the meta-learning domain for computer vision, and we exhaustively evaluate common meta-learning techniques for strengthening the generalization capability. Furthermore, we propose functional contrastive learning (FCL) over the task representations in Conditional Neural Processes (CNPs) and train in an end-to-end fashion.

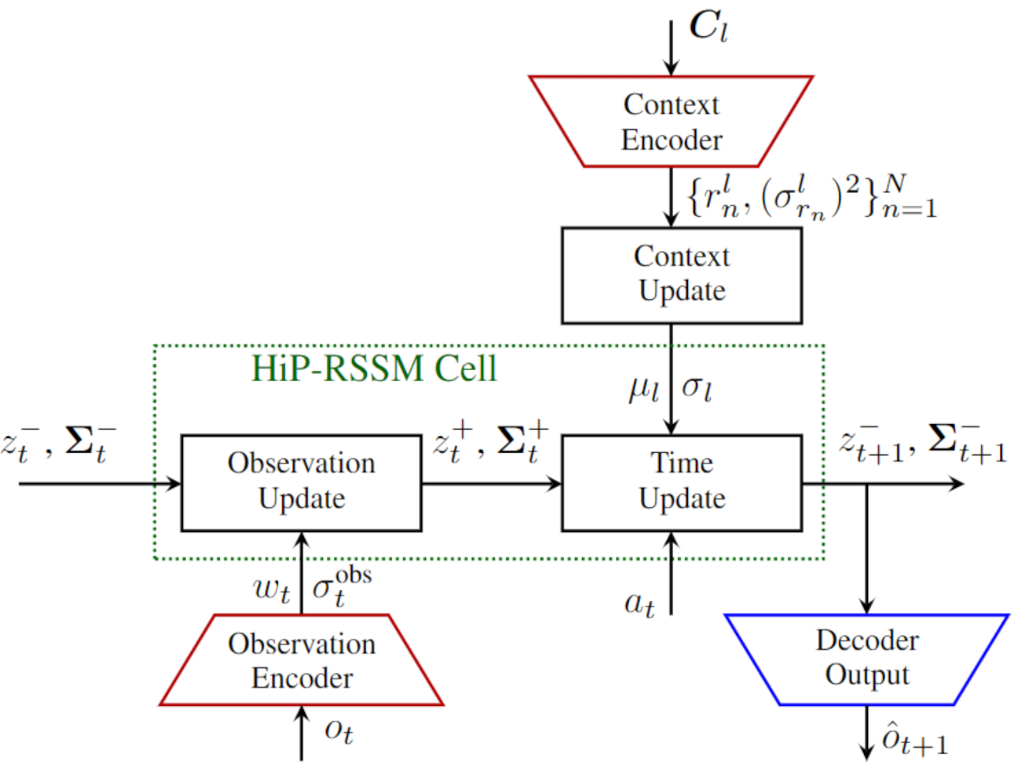

We propose a multi-task deep Kalman model that can adapt to changing dynamics and environments. The model gives state-of-the-art performance on several non-stationary robotic benchmarks with little computational overhead!

We propose a new method which enables robots to learn versatile and highly accurate skills in the contextual policy search setting by optimizing a mixture of experts model. We make use of Curriculum Learning, where the agent concentrates on local context regions it favors. Mathematical properties allow the algorithm to adjust the model complexity to find as many solutions as possible.

A video presenting our work can be found here.

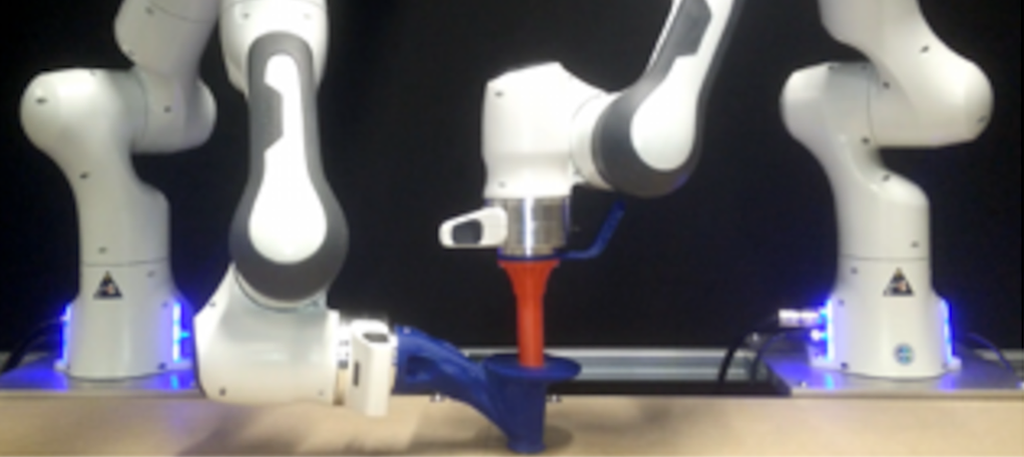

We developed a new residual reinforcement learning method that manipulates not only the output of a controller but also its input (e.g., the set-points). We applied this method to a real-robot peg-in-the-hole setup with significant position and orientation uncertainty.
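
A minimal sketch of residual actions on both sides of a controller, assuming a hypothetical joint-space PD controller with gains `kp` and `kd`; in the actual method, the residuals would come from the learned policy.

```python
import numpy as np

def pd_controller(q, qd, q_set, kp=50.0, kd=5.0):
    """Simple joint-space PD controller tracking a set-point (hypothetical gains)."""
    return kp * (q_set - q) - kd * qd

def residual_action(q, qd, q_set, delta_set, delta_u):
    """Residual RL acting on both sides of the controller.

    delta_set shifts the controller's input (its set-point) and delta_u is
    added to its output torque; both would be produced by the learned policy.
    """
    return pd_controller(q, qd, q_set + delta_set) + delta_u

print(residual_action(q=0.0, qd=0.0, q_set=1.0, delta_set=0.1, delta_u=2.0))
```

Correcting the set-point lets the policy compensate for a misestimated hole position through the controller's own dynamics, while the output residual handles effects the controller cannot express.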

Do you like Deep RL methods such as TRPO or PPO? Then you will also like this one! Our differentiable trust region layers can be used on top of any policy optimization algorithm, such as policy gradients, to obtain stable updates, with no approximations or implementation choices required :) Performance is on par with PPO in simple exploration scenarios, while we outperform PPO in more complex exploration environments.
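
As a sketch of what a differentiable trust-region layer does, the function below projects only the mean of a Gaussian policy onto a Mahalanobis trust region around the old mean; the covariance projection is omitted. The bound `eps_mu` and the function name are assumptions, and a real layer would be written in an autodiff framework rather than NumPy.

```python
import numpy as np

def project_mean(mu, mu_old, cov_old, eps_mu):
    """Project a Gaussian policy mean into a Mahalanobis trust region.

    If m = (mu - mu_old)^T cov_old^{-1} (mu - mu_old) <= eps_mu, mu is returned
    unchanged. Otherwise it is pulled toward mu_old by the convex combination
    (mu + w * mu_old) / (1 + w) with w = sqrt(m / eps_mu) - 1, which places it
    exactly on the trust-region boundary. Apart from the branch, every
    operation is differentiable, so gradients flow through the projection.
    """
    diff = mu - mu_old
    m = float(diff @ np.linalg.solve(cov_old, diff))
    if m <= eps_mu:
        return mu
    w = np.sqrt(m / eps_mu) - 1.0
    return (mu + w * mu_old) / (1.0 + w)

mu_old = np.zeros(2)
cov_old = np.diag([1.0, 4.0])
mu_new = project_mean(np.array([3.0, 4.0]), mu_old, cov_old, eps_mu=0.5)
print(mu_new)
```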

Neural Processes are powerful tools for probabilistic meta-learning. Yet, they use rather basic aggregation methods, e.g., a mean aggregator over the context, which does not give consistent uncertainty estimates and leads to poor prediction performance. Aggregating in a Bayesian way using Gaussian conditioning does a much better job! :)
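
Bayesian context aggregation can be sketched as Gaussian conditioning on a latent z: each context point contributes an observation r_i of z with its own variance, and the posterior precision is the prior precision plus the sum of the observation precisions. Names and numbers below are hypothetical; the point is that an uncertain context point barely moves the estimate, whereas a plain mean is pulled toward it.

```python
import numpy as np

def bayesian_aggregation(r, var_r, mu_0, var_0):
    """Aggregate per-context latent observations by Gaussian conditioning.

    Each context point i contributes an observation r[i] of the latent z with
    variance var_r[i]; the prior is N(mu_0, var_0), factorized over latent
    dimensions. Returns the posterior mean and variance of z.
    """
    var_z = 1.0 / (1.0 / var_0 + np.sum(1.0 / var_r, axis=0))
    mu_z = mu_0 + var_z * np.sum((r - mu_0) / var_r, axis=0)
    return mu_z, var_z

def mean_aggregation(r):
    """Baseline: plain average that ignores how informative each context point is."""
    return r.mean(axis=0)

r = np.array([[1.0], [1.2], [5.0]])        # third observation is very uncertain
var_r = np.array([[0.1], [0.1], [100.0]])
mu_z, var_z = bayesian_aggregation(r, var_r, mu_0=np.zeros(1), var_0=np.ones(1))
print(mu_z, var_z, mean_aggregation(r))
```

Here the posterior mean stays close to 1.05 with a small variance, while the plain mean is 2.4; the posterior variance also shrinks as more context points arrive, which a mean aggregator cannot express.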

An action-conditional probabilistic model inspired by Kalman filter operations in the latent state. Find out how we learn the complex non-Markovian dynamics of pneumatic soft robots and large hydraulic robots with this disentangled state and action representation.
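
The Kalman filter operations in question are an action-conditional prediction step followed by an observation update. A minimal linear-Gaussian sketch, assuming hand-picked matrices `A`, `B`, `H`, `Q`, `R` for a position-velocity state; the model in the paper operates in a learned latent space, which this sketch does not attempt.

```python
import numpy as np

def kalman_predict(mu, P, A, B, a, Q):
    """Action-conditional prediction: z' = A z + B a + noise with covariance Q."""
    return A @ mu + B @ a, A @ P @ A.T + Q

def kalman_update(mu, P, y, H, R):
    """Condition the belief on an observation y = H z + noise with covariance R."""
    S = H @ P @ H.T + R                # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)     # Kalman gain
    mu_post = mu + K @ (y - H @ mu)
    P_post = (np.eye(len(mu)) - K @ H) @ P
    return mu_post, P_post

dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])  # position-velocity dynamics
B = np.array([[0.0], [dt]])            # the action acts as an acceleration
H = np.array([[1.0, 0.0]])             # only the position is observed
Q, R = 0.01 * np.eye(2), np.array([[0.1]])

mu_pred, P_pred = kalman_predict(np.zeros(2), np.eye(2), A, B, np.array([1.0]), Q)
mu_post, P_post = kalman_update(mu_pred, P_pred, np.array([0.5]), H, R)
print(mu_post, np.trace(P_post))
```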

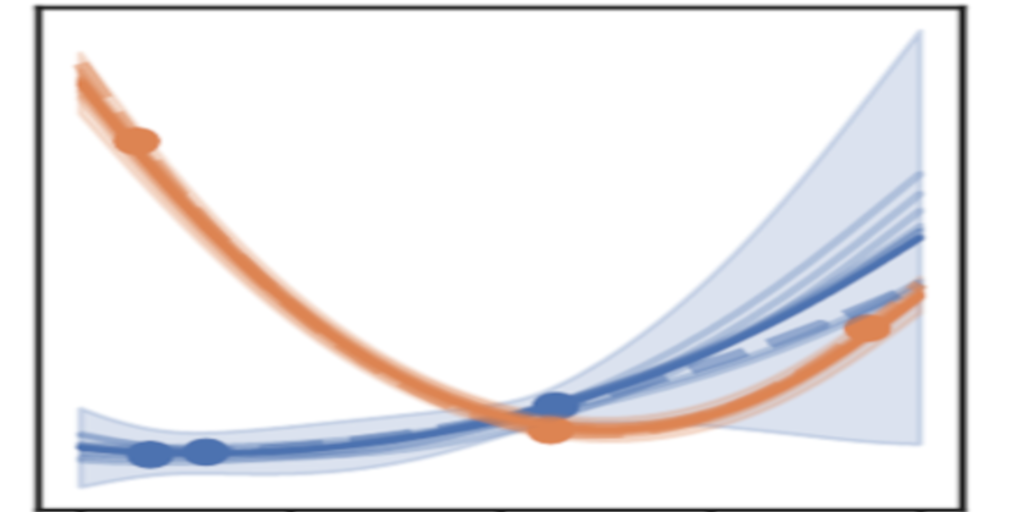

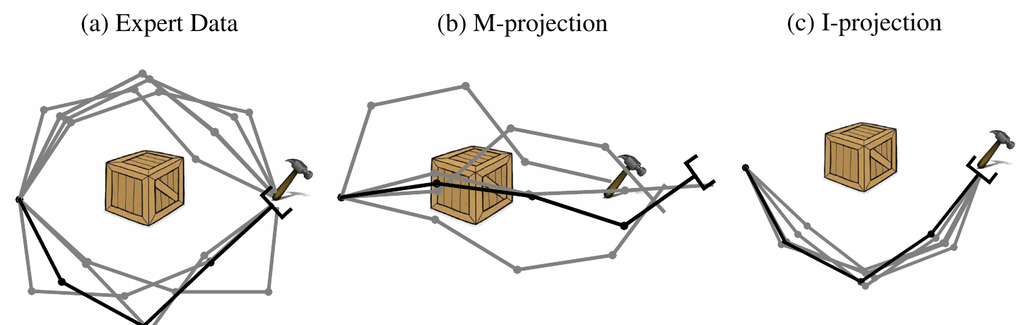

Many methods for machine learning rely on approximate inference from intractable probability distributions. Learning sufficiently accurate approximations requires a rich model family and careful exploration of the relevant modes of the target distribution...

Using the I-Projection for Mixture Density Estimation. Find out why maximum likelihood is not well suited for mixture density modelling and why you should use the I-projection instead.
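
The difference between the two projections already shows up when fitting a single Gaussian to a bimodal target. Maximum likelihood minimizes the forward KL (the M-projection) and tends to average over the modes, while the I-projection minimizes the reverse KL and tends to lock onto one mode. The toy grid search below, with a hypothetical target and search ranges, illustrates this; the work itself is about mixture models, which this sketch does not fit.

```python
import numpy as np

x = np.linspace(-6.0, 6.0, 601)

def normalized_gaussian(mu, sigma):
    """Gaussian discretized on the grid x and renormalized to sum to one."""
    p = np.exp(-0.5 * ((x - mu) / sigma) ** 2)
    return p / p.sum()

def kl(p, q):
    """KL(p || q) for discrete distributions; assumes q > 0 wherever p > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Bimodal target with two well-separated modes.
target = 0.5 * normalized_gaussian(-3.0, 0.5) + 0.5 * normalized_gaussian(3.0, 0.5)

candidates = [(mu, s) for mu in np.linspace(-4, 4, 81) for s in np.linspace(0.3, 4.0, 38)]
m_proj = min(candidates, key=lambda c: kl(target, normalized_gaussian(*c)))  # max likelihood
i_proj = min(candidates, key=lambda c: kl(normalized_gaussian(*c), target))  # I-projection
print("M-projection (mu, sigma):", m_proj)
print("I-projection (mu, sigma):", i_proj)
```

The M-projection ends up centered near 0 with a large standard deviation, putting much of its mass in the empty region between the modes, while the I-projection sits on one of the modes at ±3 with a small standard deviation.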

The Autonomous Learning Robots (ALR) Lab was founded in January 2020 at KIT. The new group is now being built up and is looking forward to doing exciting research and teaching!