New ICRA Paper: Hierarchical Policy Learning for Mechanical Search


Authors:

Oussama Zenkri, Ngo Anh Vien, Gerhard Neumann


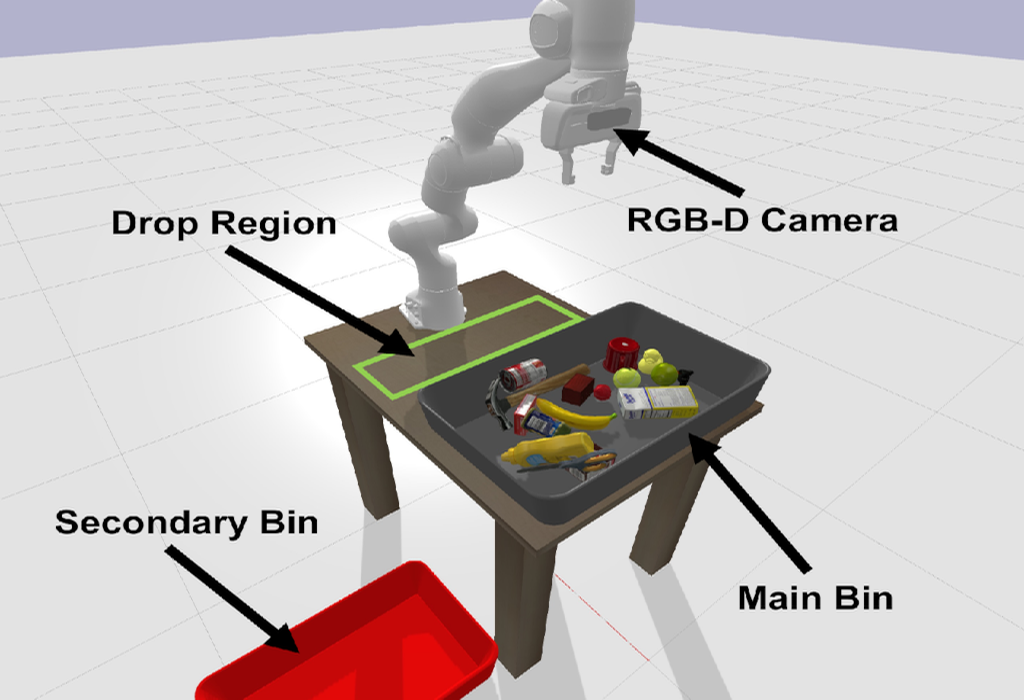

Retrieving objects from clutter is a complex task that requires multiple interactions with the environment before the target object can be extracted. These interactions involve executing action primitives such as grasping or pushing, as well as prioritizing which objects to manipulate and which actions to execute. Mechanical Search (MS) is a framework for object retrieval that uses a heuristic algorithm for pushing and rule-based algorithms for high-level planning. While rule-based policies benefit from human intuition, they often perform sub-optimally. Deep reinforcement learning (RL) has shown strong performance in complex tasks such as making decisions from pixel observations, which makes it suitable for training object-retrieval policies. In this work, we first formulate the MS problem in a principled way as a hierarchical partially observable Markov decision process (POMDP). Based on this formulation, we propose a hierarchical policy learning approach for the MS problem. For demonstration, we present two main parameterized sub-policies: a push policy and an action selection policy. When integrated into the hierarchical POMDP's policy, our proposed sub-policies increase the success rate of retrieving the target object from less than 32% to nearly 80%, while reducing the computation time for push actions from multiple seconds to less than 10 milliseconds.
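To make the two-level structure concrete, here is a minimal Python sketch of how an action selection policy might dispatch to parameterized sub-policies such as a push policy. It is an illustration only, not the paper's implementation: the class `HierarchicalPolicy`, the three-number observation, and the hand-written rules are all hypothetical stand-ins for the learned, image-based policies described above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical stand-ins: the paper works on image observations, while this
# sketch uses a tiny vector (target visibility, x, y) to stay self-contained.
Observation = Sequence[float]
Params = Tuple[float, ...]


@dataclass
class HierarchicalPolicy:
    """High-level selector that delegates to one sub-policy per primitive."""
    select: Callable[[Observation], str]
    sub_policies: Dict[str, Callable[[Observation], Params]]

    def act(self, obs: Observation) -> Tuple[str, Params]:
        primitive = self.select(obs)              # high level: which primitive?
        params = self.sub_policies[primitive](obs)  # low level: with which parameters?
        return primitive, params


def select_primitive(obs: Observation) -> str:
    # Assumed rule for illustration: grasp once the target is mostly visible.
    return "grasp" if obs[0] >= 0.5 else "push"


def push_policy(obs: Observation) -> Params:
    # Assumed parameterization: push start point (x, y) and a fixed direction.
    return (obs[1], obs[2], 0.0)


def grasp_policy(obs: Observation) -> Params:
    # Assumed parameterization: grasp location (x, y).
    return (obs[1], obs[2])


policy = HierarchicalPolicy(
    select=select_primitive,
    sub_policies={"push": push_policy, "grasp": grasp_policy},
)
```

Calling `policy.act([0.2, 0.1, 0.3])` selects the push primitive because the assumed visibility value is below the threshold. In a learned setting, each of the three functions would be replaced by a trained model while the dispatch structure stays the same.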

Link: https://arxiv.org/abs/2202.13680

Video: https://youtu.be/cioawhgFiLU